Lecture 3

Parallelization in the AI Era

Georgetown University

Fall 2025

Agenda and Goals for Today

- Scaling up and scaling out for AI data pipelines

- Parallelization fundamentals for data processing

- Map and Reduce functions

- Parallel data preparation for AI/ML workloads

- Distributed data processing frameworks (Spark, Ray, Dask)

- Lab Preview: Parallelization with Python

  - Use the `multiprocessing` module

  - Implement synchronous and asynchronous processing

  - Parallel data preprocessing examples

- Homework Preview: Parallelization with Python

  - Parallel data processing

  - Building scalable data pipelines

Looking back

- Continued great use of Slack

- Nice interactions

- Due date reminders:

- Assignment 2: September 17, 2025

- Lab 3: September 17, 2025

- Assignment 3: September 24, 2025

Glossary

| Term | Definition |
|---|---|
| Local | Your current workstation (laptop, desktop, etc.), wherever you start the terminal/console application. |
| Remote | Any machine you connect to via ssh or other means. |
| EC2 | Single virtual machine in the cloud where you can run computation (ephemeral) |
| SageMaker | Integrated Development Environment where you can conduct data science on single machines or run distributed training |
| GPU | Graphics Processing Unit - specialized hardware for parallel computation, essential for AI/ML |
| TPU | Tensor Processing Unit - Google’s custom AI accelerator chips |
| Ephemeral | Lasting for a short time - any machine that will get turned off, or any place where you will lose data |
| Persistent | Lasting for a long time - any environment where your work is NOT lost when the timer goes off |

Parallelization

Typical real world scenarios

- You need to prepare training data for LLMs by cleaning and deduplicating 100TB of web-scraped text

- You are building a RAG system that requires embedding and indexing millions of documents in parallel

- You need to extract structured data from millions of PDFs using vision models for document AI

- You are preprocessing multimodal datasets with billions of image-text pairs for foundation model training

- You need to run quality filtering on petabytes of Common Crawl data for training dataset curation

- You are generating synthetic training data using LLMs to augment limited real-world datasets

- You need to transform and tokenize text corpora across 100+ languages for multilingual AI

- You are building real-time data pipelines that process streaming data for online learning systems

Data-for-AI Parallel Scenarios

- Web-scale data processing: Filtering 45TB of Common Crawl data for high-quality training examples

- Embedding generation: Creating vector embeddings for 100M documents using sentence transformers

- Data deduplication: Finding near-duplicates in billion-scale datasets using MinHash and LSH

- Synthetic data generation: Using LLMs to generate millions of instruction-following examples

- Data quality assessment: Running parallel quality checks on training data (toxicity, bias, factuality)

- Feature extraction: Extracting features from millions of images, videos, or audio files for AI training

Parallel Programming

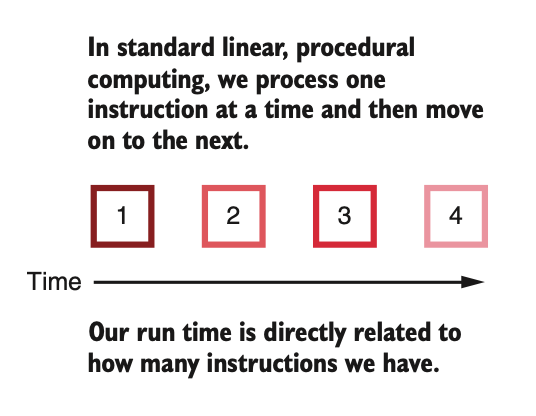

Linear vs. Parallel

Linear/Sequential

1. A program starts to run

2. The program issues an instruction

3. The instruction is executed

4. Steps 2 and 3 are repeated

5. The program finishes running

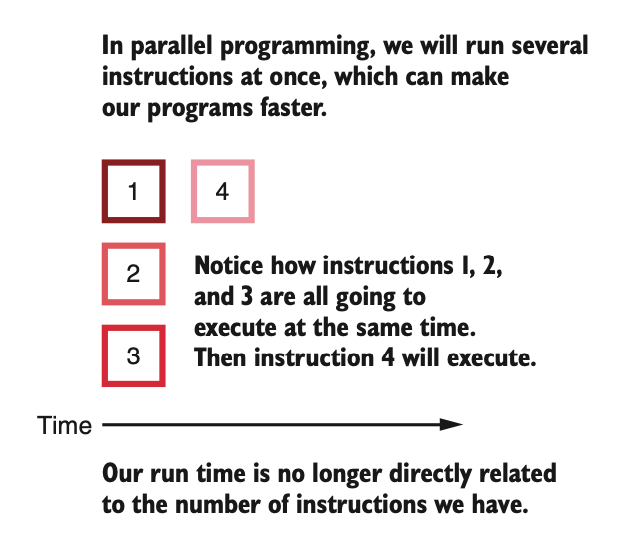

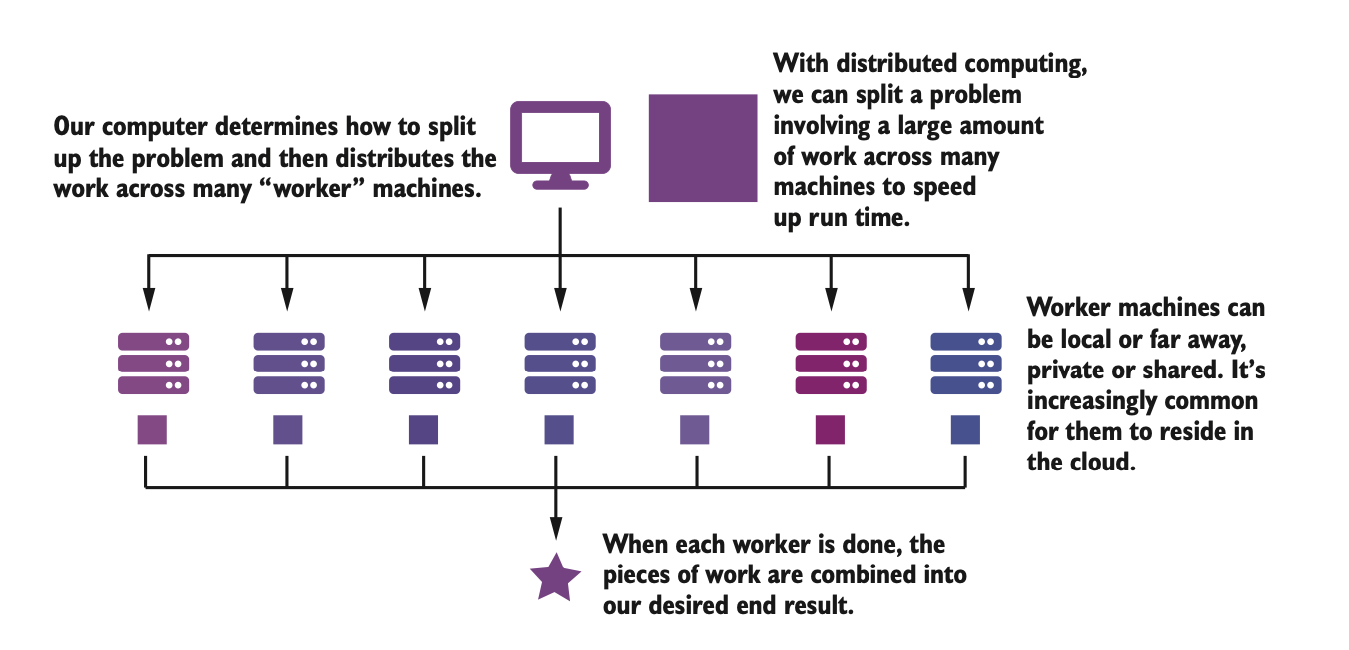

Parallel

- A program starts to run

- The program divides up the work into chunks of instructions and data

- Each chunk of work is executed independently

- The chunks of work are reassembled

- The program finishes running
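The parallel steps above can be sketched with the standard library's `concurrent.futures`. This is a minimal illustration, not a tuned implementation: the `clean` function, the chunk size and the worker count are assumptions chosen for the example. A thread pool keeps the sketch self-contained; for CPU-heavy pure-Python work a process pool is the usual choice because of the GIL (covered later in this lecture).

```python
from concurrent.futures import ThreadPoolExecutor

def clean(record: str) -> str:
    # Hypothetical per-record work: trim whitespace and lowercase
    return record.strip().lower()

def process_chunk(chunk: list[str]) -> list[str]:
    # Each chunk is processed independently of the others
    return [clean(r) for r in chunk]

def run_parallel(records: list[str], chunk_size: int = 2, workers: int = 4) -> list[str]:
    # 1. Divide the work into chunks
    chunks = [records[i:i + chunk_size] for i in range(0, len(records), chunk_size)]
    # 2. Execute each chunk independently on a pool of workers
    with ThreadPoolExecutor(max_workers=workers) as pool:
        processed = list(pool.map(process_chunk, chunks))
    # 3. Reassemble the chunks (pool.map preserves input order)
    return [r for chunk in processed for r in chunk]

records = ["  Alpha", "BETA ", " Gamma ", "delta"]
print(run_parallel(records))  # ['alpha', 'beta', 'gamma', 'delta']
```

The result matches a sequential `[clean(r) for r in records]`; only the execution strategy changes.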

Linear vs. Parallel

From a data engineering for AI perspective

Linear

- The data remains monolithic

- Procedures act on the data sequentially

- Each procedure has to complete before the next procedure can start

- You can think of this as a single pipeline

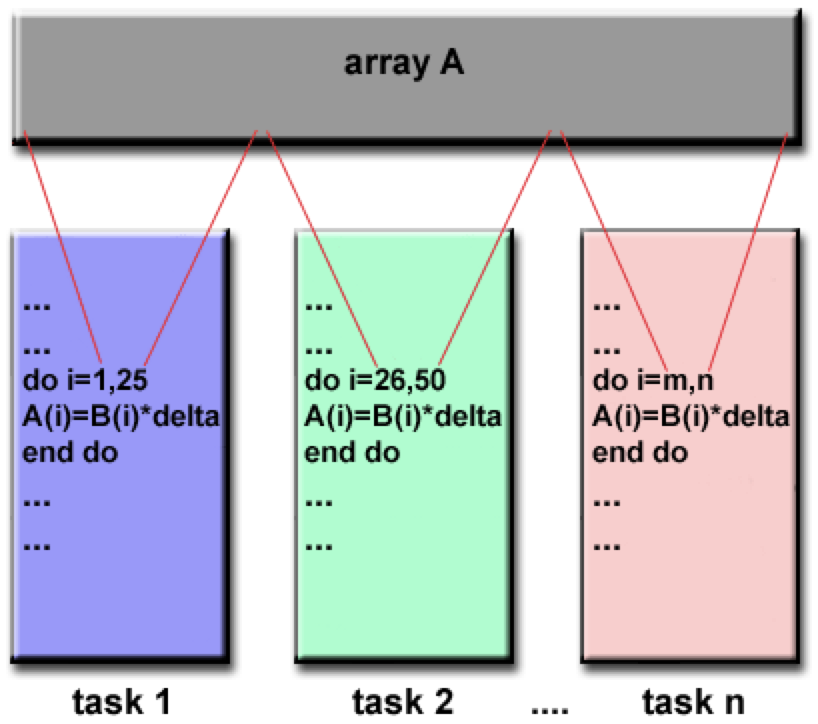

Parallel

- The data can be split up into chunks

- The same procedures can be run on each chunk at the same time

- Or, independent procedures can run on different chunks at the same time

- Need to bring things back together at the end

What are some examples of linear and parallel data science workflows?

Embarrassingly Parallel in Data for AI

It’s easy to speed things up when:

- Processing millions of documents independently for text extraction

- Generating embeddings for each document in a corpus

- Applying the same preprocessing to each image in a dataset

- Running quality filters on individual data samples

- Tokenizing text files independently

- Converting file formats (PDF to text, audio to features)

- Validating and cleaning individual records

Just run multiple data processing tasks at the same time

Modern Data Processing Tools for AI:

- Apache Spark: Distributed data processing at scale

- Ray Data: Scalable data preprocessing for ML

- Dask: Parallel computing with Python APIs

- Polars: Fast DataFrame library with parallel execution

Embarrassingly Parallel

The concept is based on the old middle/high school math problem:

If 5 people can shovel a parking lot in 6 hours, how long will it take 100 people to shovel the same parking lot?

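The basic idea is that many hands (cores/instances) make light (fast/efficient) work of the same problem, as long as the effort can be split into nearly equal parcels. In the ideal case the job is 5 × 6 = 30 person-hours, so 100 people would need 30 / 100 = 0.3 hours (18 minutes); in practice they get in each other's way, which is exactly the overhead discussed next.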

Embarrassingly parallel

- If you can truly split up your problem into multiple independent parts, then you can often get linear speedups with the number of parallel components (to a limit)

- The more cores you use and the more you parallelize, the more communication overhead you incur and the less RAM each worker has available, so the speedup is almost always sub-linear: on a 4-core machine you will probably get a 3-4x speedup, but rarely a full 4x

- The question often is: which part of your problem is embarrassingly parallel?

- Amdahl’s law (which we’ll see in a few slides) shows that parallelization pays off overall only if a large proportion of the problem is parallelizable

- It’s not all milk and honey. Setting up, programming, evaluating, and debugging parallel computations requires more infrastructure and more expertise.

When might parallel programming not be more efficient?

Some limitations in Data Processing for AI

You can get speedups by parallelizing data operations, but

- Data skew: Uneven distribution of data can create bottlenecks (e.g., one partition has 90% of data)

- I/O bound operations: Reading/writing to storage can limit parallelization benefits

- Memory overhead: Loading large models (like BERT) on each worker for feature extraction

- Deduplication challenges: Some operations like global deduplication require data shuffling

- Ordering dependencies: Maintaining sequence order in time-series or conversational data

Data-Specific Challenges

- Data quality issues: Bad data in parallel pipelines can be hard to trace

- Resource management: Large models (BERT, GPT) need significant memory per worker

- Consistency: Ensuring all workers use same preprocessing versions

Making sure we get all the pieces back requires monitoring:

- Failure tolerance and protections (Spark checkpointing)

- Proper collection and aggregation of the processed data

- Data validation after parallel processing

Amdahl’s Law in Data Processing

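\[ S_{latency} = \frac{1}{(1-p) + \frac{p}{s}} \qquad\text{and}\qquad \lim_{s\rightarrow\infty} S_{latency} = \frac{1}{1-p} \]

where \(p\) is the fraction of the work that can be parallelized and \(s\) is the speedup of that parallel part (for example, the number of workers).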

Data Pipeline Examples:

- If 80% is parallel (processing) and 20% is sequential (I/O), max speedup = 5x

- If 95% is parallel (embedding generation), max speedup = 20x
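Amdahl's law is easy to check numerically. A minimal sketch; the function name `amdahl_speedup` is ours, and `s` is treated as the speedup of the parallel part (for example, the number of workers under ideal scaling).

```python
import math

def amdahl_speedup(p: float, s: float) -> float:
    """Overall speedup when a fraction p of the work is sped up by a factor s."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    # The serial part (1 - p) is unchanged; the parallel part p shrinks to p / s
    return 1.0 / ((1.0 - p) + p / s)

# Limits from the pipeline examples (s -> infinity)
print(round(amdahl_speedup(0.80, math.inf), 2))  # 5.0
print(round(amdahl_speedup(0.95, math.inf), 2))  # 20.0

# With a finite number of workers the speedup is much lower than the limit
print(round(amdahl_speedup(0.95, 32), 2))  # 12.55
```

Even with 32 workers, a pipeline that is 95% parallel gets only about 12.5x, well short of the 20x ceiling.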

Real Data Processing Speedups:

- Document processing: Near-linear up to 100s of workers

- Embedding generation: Linear with number of GPUs

- Data deduplication: Sub-linear due to shuffling overhead

Pros and cons of parallelization for AI Data

Yes - Parallelize These

Data Preparation:

- Text extraction from documents (PDFs, HTML)
- Tokenization of text corpora
- Image preprocessing and augmentation
- Embedding generation for documents
- Data quality filtering and validation
- Format conversions (audio → features)
- Web scraping and data collection
- Synthetic data generation

Data Processing:

- Batch inference on datasets
- Feature extraction at scale
- Data deduplication (local)
- Parallel chunk processing

No - Keep Sequential

Order-Dependent:

- Conversation threading
- Time-series preprocessing
- Sequential data validation
- Cumulative statistics

Global Operations:

- Global deduplication
- Cross-dataset joins
- Sorting entire datasets
- Computing exact quantiles

Small Data:

- Config file processing
- Metadata operations
- Single document processing

Data operations in the “No” column usually require global coordination or strict ordering. However, many of them can be approximated with parallel algorithms (for example, approximate deduplication with locality-sensitive hashing, or approximate quantiles with t-digest).

Pros and cons of parallelization

Pros

- Higher efficiency

- Uses modern infrastructure

- Scalable to larger data and more complex procedures, provided the procedures are embarrassingly parallel

Cons

- Higher programming complexity

- Need proper software infrastructure (MPI, Hadoop, etc.)

- Need to ensure right packages/modules are distributed across processors

- Need to account for a proportion of jobs failing, and recovering from them

- Hence, Hadoop/Spark and other technologies

- Higher setup cost in terms of time/expertise/money

Good solutions exist today for most of the cons, so the pros win out, and this paradigm is now widely accepted and implemented

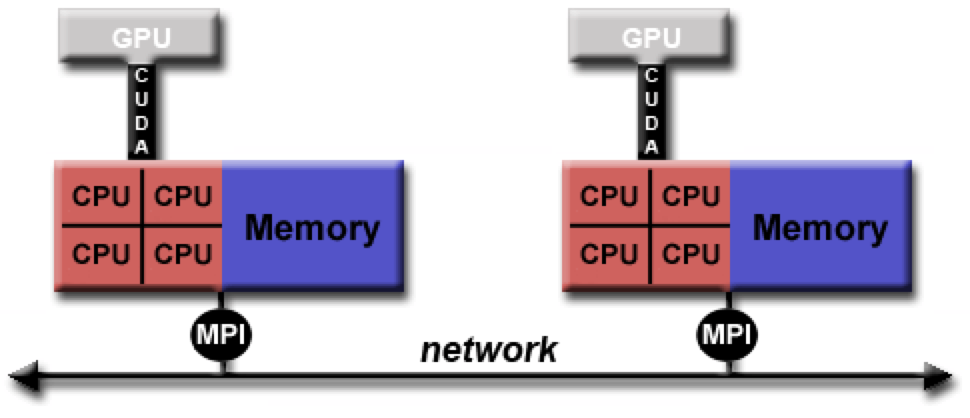

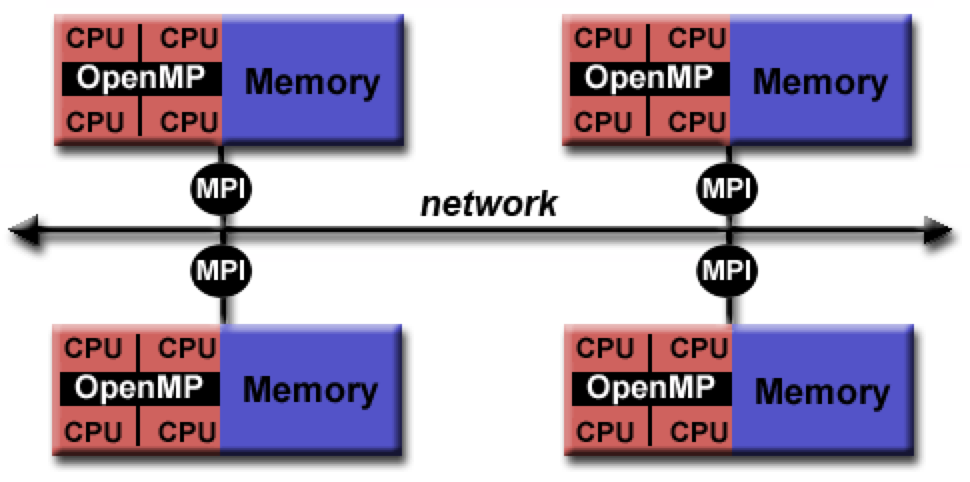

Parallel Programming Models

Distributed memory / Message Passing Model

Data parallel model

Hybrid model

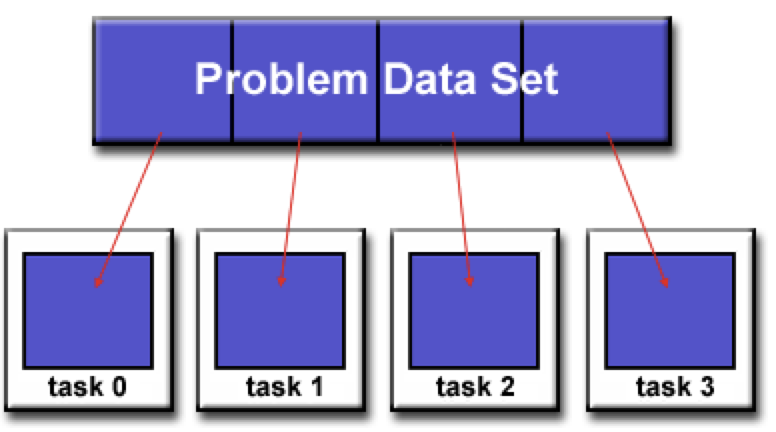

Partitioning data

Designing parallel programs

- Data partitioning

- Communication

- Synchronization / Orchestration

- Data dependencies

- Load balancing

- Input and Output (I/O)

- Debugging

A lot of these components are data engineering and DevOps issues

Infrastructures have standardized many of these and have helped data scientists implement parallel programming much more easily

We’ll see in the lab how the multiprocessing module in Python makes parallel processing on a machine quite easy to implement

Parallel Data Processing for AI

Common AI Data Workloads

- Text preprocessing: Tokenization, cleaning for LLM training

- Embedding generation: Converting documents to vectors

- Data quality filtering: Removing low-quality training samples

- Format conversion: PDF to text, audio to features

- Synthetic data generation: Using LLMs to create training data

- Deduplication: Finding and removing duplicate content

Most of these operations are embarrassingly parallel: each document/sample can be processed independently. Global deduplication is the exception, since it has to compare samples against each other.

Parallel Computing

Functional Programming

Map and Reduce

Components of a parallel programming workflow

- Divide the work into chunks

- Work on each chunk separately

- Reassemble the work

This paradigm is often referred to as a map-reduce framework, or, more descriptively, the split-apply-combine paradigm
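The split-apply-combine steps can be sketched in plain Python: split records by a key, apply a summary to each group independently (the part that could run in parallel), then combine the per-group results. The records and the choice of `mean` as the summary are illustrative assumptions.

```python
from collections import defaultdict
from statistics import mean

# Illustrative records: (language, document length in tokens)
records = [("en", 120), ("fr", 80), ("en", 200), ("de", 50), ("fr", 100)]

# Split: group records by key
groups = defaultdict(list)
for lang, n_tokens in records:
    groups[lang].append(n_tokens)

# Apply: summarize each group independently (each call could run on its own worker)
summaries = map(lambda item: (item[0], mean(item[1])), groups.items())

# Combine: collect the per-group results into one table
combined = dict(sorted(summaries))
print(combined)  # {'de': 50, 'en': 160, 'fr': 90}
```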

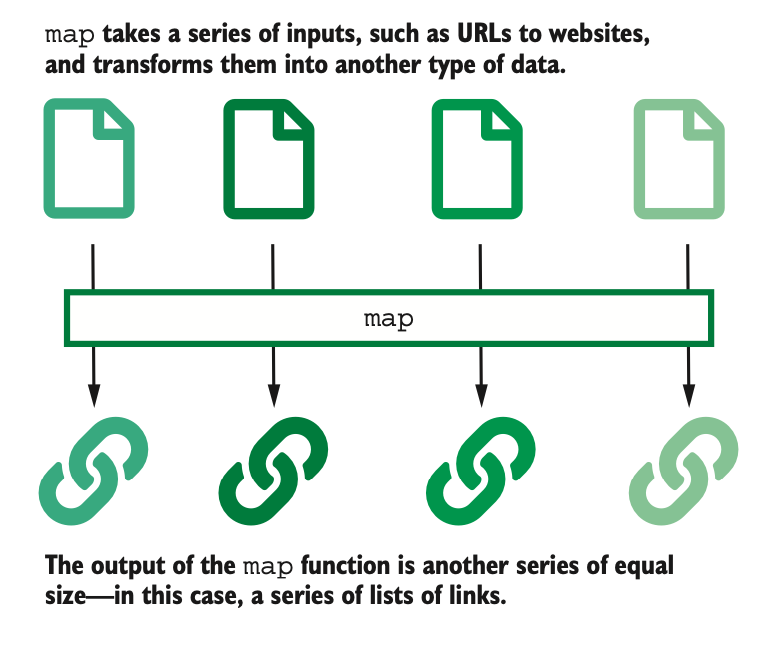

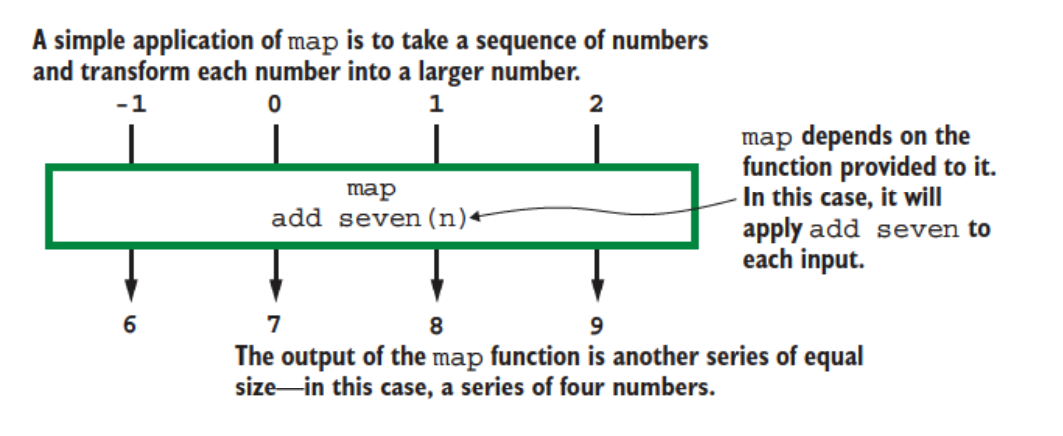

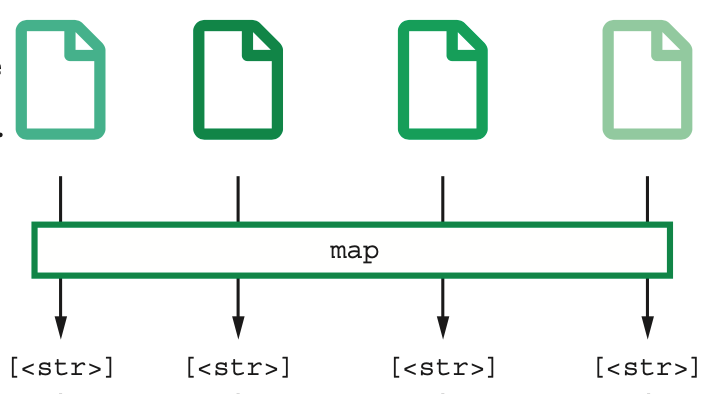

Map

The map operation is a 1-1 operation that takes each split and processes it

The map operation keeps the same number of objects in its output that were present in its input

Map

The operations included in a particular map can be quite complex, involving multiple steps. In fact, you can implement a pipeline of procedures within the map step to process each data object.

The main point is that the same operations will be run on each data object in the map implementation
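A minimal sketch of a map step that runs a small multi-step pipeline on every document. The lowercasing, punctuation stripping and whitespace tokenization are stand-ins for real preprocessing; the point is that the same function is applied to each object and the output has exactly one item per input.

```python
import re

def preprocess(doc: str) -> list[str]:
    # Step 1: normalize case
    text = doc.lower()
    # Step 2: replace everything except letters, digits and whitespace
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    # Step 3: whitespace tokenization (a stand-in for a real tokenizer)
    return text.split()

docs = ["Hello, World!", "Map keeps 1-1 structure.", ""]
tokenized = list(map(preprocess, docs))

print(tokenized)
# [['hello', 'world'], ['map', 'keeps', '1', '1', 'structure'], []]
```

Note that the empty document still yields an (empty) output: map never drops or merges objects.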

Map

Some examples of map operations are:

Traditional Data Analytics:

1. Extracting a standard table from online reports from multiple years
1. Extracting particular records from multiple JSON objects
1. Transforming data (as opposed to summarizing it)
1. Running a normalization script on each transcript in a GWAS dataset
1. Standardizing demographic data for each of the last 20 years against the 2000 US population

AI Data Processing (2025):

1. Text processing: Tokenizing millions of documents for LLM training
1. Embedding generation: Converting each document to a vector representation
1. Data extraction: Extracting text from millions of PDFs using OCR
1. Quality filtering: Applying toxicity/bias filters to each text sample
1. Image preprocessing: Resizing and normalizing images for vision models
1. Synthetic data: Generating training examples from prompts using LLMs

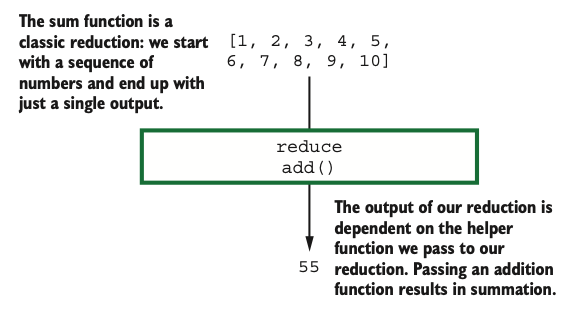

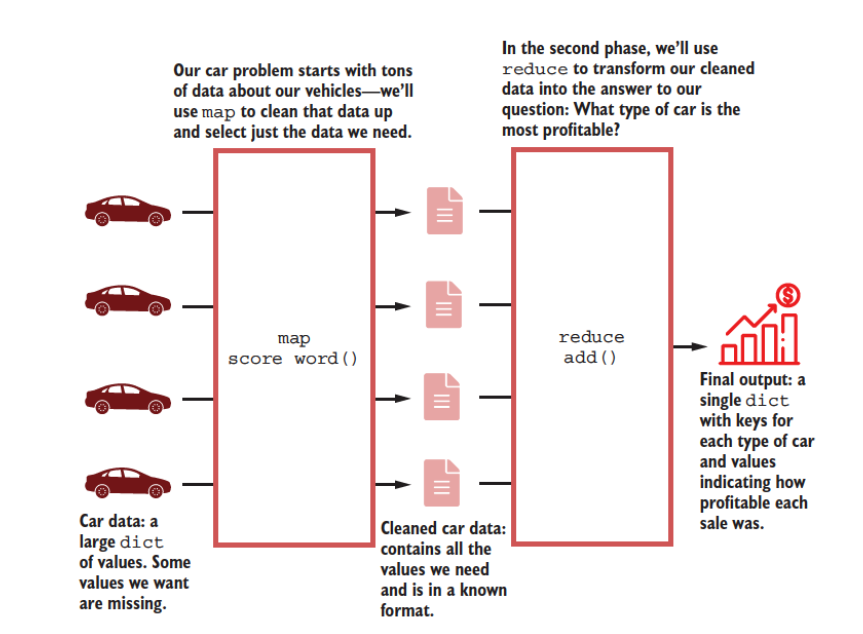

Reduce

The reduce operation takes multiple objects and reduces them to a (perhaps) smaller number of objects using transformations that aren’t amenable to the map paradigm.

These transformations are often serial/linear in nature

The reduce transformation is usually the last, not-so-elegant transformation needed after most of the other transformations have been efficiently handled in a parallel fashion by map

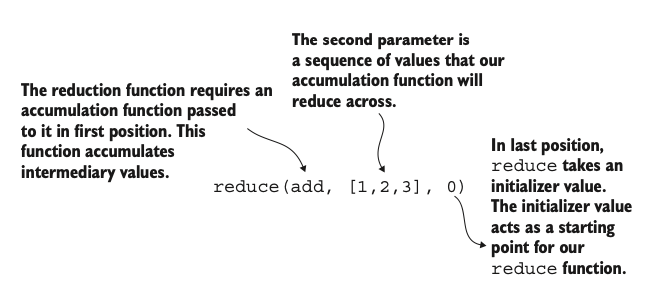

Reduce

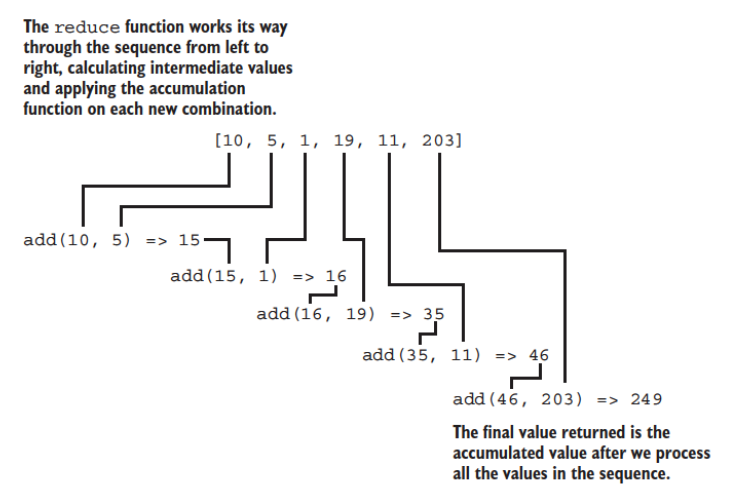

The reduce operation requires

- An accumulator function, that will update serially as new data is fed into it

- A sequence of objects to run through the accumulator function

- A starting value from which the accumulator function starts

Programmatically, this can be written as `functools.reduce(accumulator, sequence, initial)` in Python.
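A minimal sketch with Python's `functools.reduce`, showing the three ingredients. The accumulator (a running token count) is an illustrative choice; it also shows why the starting value matters, since the accumulated value (an integer) has a different type from the items in the sequence (strings).

```python
from functools import reduce

def count_tokens(running_total: int, doc: str) -> int:
    # Accumulator: fold one more document into the running total
    return running_total + len(doc.split())

docs = ["the cat sat", "on the mat", "again"]  # sequence of objects
total = reduce(count_tokens, docs, 0)          # starting value: 0
print(total)  # 7
```

Without the starting value `0`, `reduce` would pass the first two strings to `count_tokens` and fail on `str + int`.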

Reduce

The reduce operation works serially from “left” to “right”, passing each object successively through the accumulator function.

For example, if we were to add successive numbers with a function called add…
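Assuming `add` is simply two-argument addition (here `operator.add`), the left-to-right evaluation looks like this; the `traced_add` wrapper exists only to record each step.

```python
from functools import reduce
from operator import add

steps = []

def traced_add(acc, x):
    # Record each accumulator call to show the left-to-right order
    result = add(acc, x)
    steps.append(f"add({acc}, {x}) -> {result}")
    return result

total = reduce(traced_add, [1, 2, 3, 4])
print("\n".join(steps))
# add(1, 2) -> 3
# add(3, 3) -> 6
# add(6, 4) -> 10
print(total)  # 10
```

This is equivalent to `add(add(add(1, 2), 3), 4)`: with no starting value, the first element plays that role.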

Reduce

Some examples:

Traditional Analytics:

1. Finding the common elements (intersection) of a large number of sets
1. Computing a table of group-wise summaries
1. Filtering
1. Tabulating

AI Data Processing (2025):

1. Deduplication: Finding unique documents across billions of samples
1. Aggregating statistics: Computing dataset quality metrics
1. Vocabulary building: Creating token vocabularies from text corpus
1. Index building: Merging vector embeddings into searchable indices
1. Data validation: Aggregating quality scores across partitions

Map & Reduce

map-reduce

Combining the map and reduce operations creates a powerful pipeline that can handle a diverse range of problems in the Big Data context
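As an illustration, here is vocabulary building written as map-reduce: the map step counts tokens in each document independently, and the reduce step merges the per-document counts. Whitespace tokenization is a simplifying assumption.

```python
from collections import Counter
from functools import reduce

docs = ["the cat sat", "the dog sat", "a cat"]

# Map: one Counter per document (independent, so parallelizable)
partial_counts = map(lambda doc: Counter(doc.split()), docs)

# Reduce: merge the per-document counts into a corpus-level vocabulary
vocab = reduce(lambda acc, c: acc + c, partial_counts, Counter())

print(vocab["the"], vocab["cat"], vocab["dog"])  # 2 2 1
```

Because merging counts is associative, partial vocabularies from different workers can be combined in any grouping, which is what lets frameworks run the reduce step in stages.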

Parallelization and map-reduce

Parallelization and map-reduce work hand-in-hand

One of the issues here is, how to split the data in a “good” manner so that the map-reduce framework works well
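One common answer is to split the data into as many nearly equal parts as there are workers, so no worker is left holding a much larger share. A minimal sketch; `split_evenly` is a hypothetical helper, and it balances by item count only, so it cannot see skew in how expensive individual items are.

```python
def split_evenly(items: list, n_parts: int) -> list[list]:
    """Split items into n_parts contiguous chunks whose sizes differ by at most 1."""
    if n_parts < 1:
        raise ValueError("n_parts must be at least 1")
    base, extra = divmod(len(items), n_parts)
    chunks, start = [], 0
    for i in range(n_parts):
        # The first `extra` chunks get one additional item
        size = base + (1 if i < extra else 0)
        chunks.append(items[start:start + size])
        start += size
    return chunks

print([len(c) for c in split_evenly(list(range(10)), 4)])  # [3, 3, 2, 2]
```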

The Python multiprocessing module

The multiprocessing module

- Focused on single-machine multicore parallelism

- Facilitates:

- process- and thread-based parallel processing

- sharing work over queues

- sharing data among processes

Processes and threads

- A process is an executing program that is self-contained, with its own runtime and memory

- A thread is the basic unit to which the operating system allocates processor time. It is an entity within a process. A thread can execute any part of the process code, including parts currently being executed by another thread.

- A thread will often be faster to spin up and terminate than a full process

- Threads can share memory and data with each other

Python has the Global Interpreter Lock (GIL), which allows only one thread at a time to execute Python bytecode. So the way to get CPU parallelism in Python is multi-process parallelization, where we run multiple Python interpreters in multiple processes, each with its own private memory space and GIL. Threads remain useful for I/O-bound work, because a thread releases the GIL while it waits on I/O.

Some concepts in multiprocessing

Process

A new child process with its own process identifier, which runs the task independently in the operating system. Depending on the platform's start method, it is created by forking the current process or by spawning a fresh interpreter.

Pool

Manages a pool of worker processes: the work is split into chunks, the workers process the chunks, and the results are collected and returned together

Other methods of parallel processing in Python

- The `joblib` module

- Many `scikit-learn` estimators and functions have implicit parallelization baked in through the `n_jobs` parameter

- Ray for distributed AI/ML workloads

- Dask for scaling pandas/numpy operations

- Apache Beam for data pipelines

For example, a `scikit-learn` estimator created with `n_jobs=-1` uses the `joblib` module to run its bootstrapping on all available processors

AI Data Processing Example

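```python
from multiprocessing import Pool

from transformers import AutoTokenizer

# Parallel tokenization for LLM training.
# Loaded at module level, so each worker process gets its own tokenizer copy.
tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

def process_document(text):
    # Tokenize one document, truncated to BERT's 512-token limit
    tokens = tokenizer(text, truncation=True, max_length=512)
    return tokens

if __name__ == "__main__":
    # Placeholder corpus: replace with your own list of documents
    documents = ["First example document.", "Second example document."]
    # Process the documents in parallel; chunksize batches tasks to cut overhead
    with Pool(processes=32) as pool:
        tokenized_docs = pool.map(process_document, documents, chunksize=1000)
```

Async I/O in Python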

The need for Async I/O in AI Data Processing

When talking to external systems (databases, APIs, LLM services) the bottleneck is not local CPU/memory but rather the time it takes to receive a response from the external system.

The Async I/O model addresses this by letting a program send multiple requests concurrently instead of waiting for each response before sending the next.

AI Use Cases:

- Calling LLM APIs (OpenAI, Anthropic, Bedrock)

- Fetching data from multiple sources

- Parallel web scraping for training data

- Distributed vector database queries

References: asyncio — Asynchronous I/O, Async IO in Python: A Complete Walkthrough

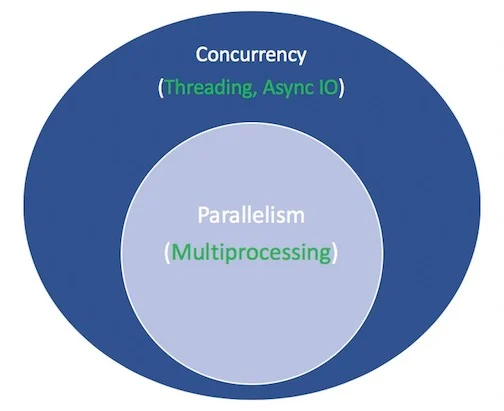

Concurrency and parallelism in Python

- Parallelism: multiple tasks run at the same time, each on a different processor. This is done through the `multiprocessing` module.

- Concurrency: multiple tasks take turns running on the same processor. Another task can be scheduled while the current one is blocked on I/O.

- Threading: multiple threads take turns executing tasks. One process can contain multiple threads. This is similar to concurrency, but within the context of a single process.

How to implement concurrency with asyncio

`asyncio` is a library for writing concurrent code using the `async`/`await` syntax.

- Use the `async` and `await` keywords. You can call an `async` function multiple times while you `await` the result of a previous invocation.

- `await` the results of multiple `async` tasks together using `gather`.

- An `async def` function (such as a `main` function) is called a coroutine. Multiple coroutines can be run concurrently as awaitable tasks.

Anatomy of an asyncio Python program

1. Write a regular Python function that makes a call to a database, an API, or any other blocking operation, as you normally would.

2. Create a coroutine, i.e. an `async` wrapper function around the blocking function, using `async` and `await`, with the call to the blocking function made through `asyncio.to_thread`. This runs the blocking call in a separate thread so the event loop stays free.

3. Create another coroutine that makes multiple calls (in a loop or list comprehension) to the `async` wrapper from the previous step and awaits completion of all of them using `asyncio.gather`.

4. Call the coroutine from the previous step from regular code using `asyncio.run`.

```python
import time
import asyncio

def my_blocking_func(i):
    # some blocking code such as an API call
    print(f"{i}, entry")
    time.sleep(1)
    print(f"{i}, exiting")
    return None

async def async_my_blocking_func(i: int):
    # Run the blocking function in a worker thread so the event loop is not blocked
    return await asyncio.to_thread(my_blocking_func, i)

async def async_my_blocking_func_for_multiple(n: int):
    # Schedule n calls concurrently and wait for all of them to finish
    return await asyncio.gather(*[async_my_blocking_func(i) for i in range(n)])

if __name__ == "__main__":
    # async version
    s = time.perf_counter()
    n = 20
    results = asyncio.run(async_my_blocking_func_for_multiple(n))
    elapsed_async = time.perf_counter() - s
    # to_thread uses the default executor (min(32, os.cpu_count() + 4) threads),
    # so all 20 calls finish in about 1 s only if that pool has at least 20 threads
    print(f"{__file__}, async_my_blocking_func_for_multiple finished in {elapsed_async:0.2f} seconds")
```

AI Example: Parallel LLM API Calls

```python
import asyncio

import aiohttp

async def call_llm_api(prompt, session):
    """Make an async call to an LLM API (api.example.com is a placeholder endpoint)."""
    async with session.post(
        "https://api.example.com/generate",
        json={"prompt": prompt, "max_tokens": 100},
    ) as response:
        response.raise_for_status()  # surface HTTP errors instead of parsing them
        return await response.json()

async def process_prompts(prompts):
    """Send all prompts concurrently; results come back in input order."""
    async with aiohttp.ClientSession() as session:
        tasks = [call_llm_api(prompt, session) for prompt in prompts]
        results = await asyncio.gather(*tasks)
        return results

# Generate synthetic data using concurrent API calls
prompts = ["Generate a customer review for...",
           "Create technical documentation for...",
           "Write a dialogue about..."] * 100

results = asyncio.run(process_prompts(prompts))
```

Tip

This approach can speed up synthetic data generation by 10-100x compared to sequential API calls, subject to the provider's rate limits
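LLM providers enforce rate limits, so firing hundreds of requests at once tends to trigger errors. A common remedy is to cap the number of in-flight requests with `asyncio.Semaphore`. A minimal sketch, assuming a hypothetical `fake_llm_call` that simulates network latency with `asyncio.sleep` in place of a real API; the cap of 10 is an arbitrary example value.

```python
import asyncio

async def fake_llm_call(prompt: str) -> str:
    # Hypothetical stand-in for an HTTP request to an LLM API
    await asyncio.sleep(0.01)
    return f"response to: {prompt}"

async def bounded_generate(prompts: list[str], max_in_flight: int = 10):
    """Run all prompts concurrently, but never more than max_in_flight at once."""
    semaphore = asyncio.Semaphore(max_in_flight)
    in_flight = 0
    peak = 0  # highest number of simultaneous requests observed

    async def worker(prompt: str) -> str:
        nonlocal in_flight, peak
        async with semaphore:  # waits here while max_in_flight calls are active
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await fake_llm_call(prompt)
            finally:
                in_flight -= 1

    # gather returns results in the same order as the input prompts
    results = await asyncio.gather(*(worker(p) for p in prompts))
    return results, peak

results, peak = asyncio.run(bounded_generate([f"prompt {i}" for i in range(50)]))
print(len(results), peak)  # 50 10
```

The cap trades some raw speed for predictable load; tune it to the provider's documented limits rather than to the number of prompts.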

Data Engineering for AI at Scale

The AI Data Challenge

- Volume: LLMs trained on trillions of tokens (petabytes of text)

- Variety: Multimodal data (text, images, audio, video, structured data)

- Velocity: Real-time data pipelines for online learning

- Veracity: Data quality is crucial for model performance

- Value: High-quality data often matters more than model architecture

“Data is the new oil, but it needs refining” - especially for AI

Parallel Data Processing Pipeline for AI

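```python
# Example: Parallel document processing for RAG system
from multiprocessing import Pool

from sentence_transformers import SentenceTransformer

model = None  # one model per worker process, loaded by init_worker

def init_worker():
    # Load the embedding model once per worker instead of once per document
    global model
    model = SentenceTransformer("sentence-transformers/all-MiniLM-L6-v2")

def process_document(doc):
    # extract_text, clean_text and chunk_text are placeholder helpers you define
    # for your own document formats; doc is assumed to have an .id attribute
    text = extract_text(doc)                      # Extract text
    cleaned = clean_text(text)                    # Clean and normalize
    chunks = chunk_text(cleaned, max_length=512)  # Chunk into passages
    embeddings = model.encode(chunks)             # Generate embeddings
    return {'doc_id': doc.id, 'chunks': chunks, 'embeddings': embeddings}

if __name__ == "__main__":
    # Process millions of documents in parallel (documents loaded elsewhere)
    with Pool(processes=32, initializer=init_worker) as pool:
        results = pool.map(process_document, documents)
```

Common Data Parallel Patterns for AI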

1. Embarrassingly Parallel

2. Map-Reduce Pattern

3. Pipeline Pattern
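The pipeline pattern runs stages concurrently and passes items between them, so stage 2 can work on one document while stage 1 handles the next. A minimal thread-and-queue sketch; the stage functions and the `None` end-of-stream marker are illustrative assumptions, and distributed frameworks handle this plumbing for you.

```python
import queue
import threading

SENTINEL = None  # marks the end of the stream

def stage(fn, inbox: queue.Queue, outbox: queue.Queue) -> threading.Thread:
    """Start a thread that applies fn to every item from inbox and forwards the result."""
    def run():
        while True:
            item = inbox.get()
            if item is SENTINEL:
                outbox.put(SENTINEL)  # propagate shutdown to the next stage
                return
            outbox.put(fn(item))
    t = threading.Thread(target=run, daemon=True)
    t.start()
    return t

raw, cleaned, tokenized = queue.Queue(), queue.Queue(), queue.Queue()
threads = [
    stage(str.strip, raw, cleaned),        # stage 1: clean
    stage(str.split, cleaned, tokenized),  # stage 2: tokenize
]

for doc in ["  the cat sat ", "on the mat  "]:
    raw.put(doc)
raw.put(SENTINEL)

results = []
while (item := tokenized.get()) is not SENTINEL:
    results.append(item)
for t in threads:
    t.join()

print(results)  # [['the', 'cat', 'sat'], ['on', 'the', 'mat']]
```

With one thread per stage and FIFO queues, output order matches input order; adding more workers per stage would speed it up but can reorder items.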

Data Quality at Scale

Quality Checks to Parallelize

- Duplicate detection (MinHash)

- Language identification

- Toxicity filtering

- PII detection and removal

- Format validation

- Schema compliance

- Statistical outlier detection
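Because each of these checks looks at one document at a time, they map cleanly onto a pool of workers. A minimal sketch with two toy checks: a minimum-length rule, and a regex that masks email addresses as a crude form of PII removal. Both rules and the `MIN_WORDS` threshold are illustrative assumptions, far simpler than production filters; a thread pool keeps the sketch self-contained, while CPU-heavy checks would go to a process pool.

```python
import re
from concurrent.futures import ThreadPoolExecutor

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
MIN_WORDS = 3  # illustrative threshold

def quality_check(doc: str) -> dict:
    # Mask email addresses (toy PII removal), then apply a length filter
    masked = EMAIL.sub("[EMAIL]", doc)
    return {"text": masked, "keep": len(masked.split()) >= MIN_WORDS}

docs = [
    "Contact me at jane.doe@example.com for details",
    "too short",
    "A perfectly ordinary training sentence.",
]

with ThreadPoolExecutor(max_workers=4) as pool:
    checked = list(pool.map(quality_check, docs))

kept = [c["text"] for c in checked if c["keep"]]
print(kept)
# ['Contact me at [EMAIL] for details', 'A perfectly ordinary training sentence.']
```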

Quality Metrics for AI Data

- Token distribution analysis

- Vocabulary coverage

- Domain representation

- Bias measurement

- Data freshness

- Annotation agreement

- Synthetic data detection

Distributed Frameworks for AI Data

Apache Spark for Large-Scale Processing

Ray Data for ML Pipelines

Embedding Generation at Scale

- Challenge: Generate embeddings for 100M+ documents

- Solution: Distributed GPU processing

```python
# Distributed embedding generation: run one copy of this function per GPU
# (started by your process launcher), then gather the per-rank results
from sentence_transformers import SentenceTransformer

def generate_embeddings_distributed(documents, rank, world_size):
    # Each GPU processes a strided subset: items rank, rank + world_size, ...
    subset = documents[rank::world_size]
    # SentenceTransformer handles tokenization and pooling;
    # a plain transformers AutoModel has no encode() method
    model = SentenceTransformer(
        "sentence-transformers/all-MiniLM-L6-v2", device=f"cuda:{rank}"
    )
    # encode() batches internally and does not track gradients
    embeddings = model.encode(subset, batch_size=256)
    return embeddings
```

Data Deduplication for AI

Why Deduplication Matters

- Training on duplicates wastes compute

- Can cause overfitting and memorization

- Critical for web-scale datasets
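MinHash deduplication parallelizes well because each document's signature depends only on that document. To see what a library MinHash estimates, here is a from-scratch sketch using only the standard library. Assumptions: word 3-gram shingles and 128 hash functions derived from `hashlib.blake2b` by prefixing a seed; this is the textbook MinHash idea, not any library's internal implementation.

```python
import hashlib

def shingles(text: str, k: int = 3) -> set[str]:
    # Word k-grams ("shingles") represent the document as a set
    words = text.lower().split()
    return {" ".join(words[i:i + k]) for i in range(max(1, len(words) - k + 1))}

def _hash(seed: int, shingle: str) -> int:
    # One member of a family of hash functions, selected by seed
    digest = hashlib.blake2b(f"{seed}:{shingle}".encode(), digest_size=8).digest()
    return int.from_bytes(digest, "big")

def minhash_signature(text: str, num_perm: int = 128) -> list[int]:
    # For each hash function, keep the minimum hash over all shingles
    sh = shingles(text)
    return [min(_hash(seed, s) for s in sh) for seed in range(num_perm)]

def estimated_jaccard(sig_a: list[int], sig_b: list[int]) -> float:
    # The chance that two min-hashes match equals the Jaccard similarity
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)

def true_jaccard(a: str, b: str) -> float:
    sa, sb = shingles(a), shingles(b)
    return len(sa & sb) / len(sa | sb)

a = "the quick brown fox jumps over the lazy dog near the river bank"
b = "the quick brown fox jumps over the lazy cat near the river bank"
sig_a, sig_b = minhash_signature(a), minhash_signature(b)
print(round(true_jaccard(a, b), 2), round(estimated_jaccard(sig_a, sig_b), 2))
```

The estimate approaches the true Jaccard similarity (8/14 here) as `num_perm` grows; LSH then buckets signatures so that only likely near-duplicates are compared.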

Parallel Deduplication Strategies

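```python
# MinHash for approximate deduplication
from multiprocessing import Pool

from datasketch import MinHash, MinHashLSH

NUM_PERM = 128

def generate_minhash(item):
    # item is a (doc_id, text) pair; hash each distinct word into the signature
    doc_id, text = item
    m = MinHash(num_perm=NUM_PERM)
    for token in set(text.split()):
        m.update(token.encode("utf8"))
    return doc_id, m

def parallel_dedup(documents):
    """documents: dict of doc_id -> text. Call from under `if __name__ == "__main__":`."""
    # Step 1: Generate MinHash signatures (parallel)
    with Pool() as pool:
        signatures = pool.map(generate_minhash, documents.items())
    lsh = MinHashLSH(threshold=0.9, num_perm=NUM_PERM)  # Step 2: LSH index
    unique_ids = []
    for doc_id, sig in signatures:
        # Step 3: keep a document only if no kept document is a near-duplicate
        if not lsh.query(sig):
            lsh.insert(doc_id, sig)
            unique_ids.append(doc_id)
    return {doc_id: documents[doc_id] for doc_id in unique_ids}
```

Synthetic Data Generation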

Parallel Synthetic Data Creation

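```python
from concurrent.futures import ThreadPoolExecutor

# Generate training data using LLMs
prompts = [
    "Generate a customer service dialogue about...",
    "Create technical documentation for...",
    "Write a product review for..."
]

# generate_with_llm(prompt) is assumed to wrap your LLM client and return text.
# Threads suit this I/O-bound work: each one mostly waits on the network.
with ThreadPoolExecutor(max_workers=100) as executor:
    futures = []
    for prompt in prompts:
        future = executor.submit(generate_with_llm, prompt)
        futures.append(future)
    # result() waits for each call and re-raises any exception it hit
    synthetic_data = [f.result() for f in futures]
```

Use Cases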

- Augmenting limited datasets

- Creating instruction-tuning data

- Generating test cases

- Privacy-preserving alternatives

Best Practices for Parallel Data Processing

- Profile First: Identify bottlenecks before parallelizing

- Chunk Appropriately: Balance chunk size with overhead

- Handle Failures: Implement retry logic and checkpointing

- Monitor Progress: Use progress bars and logging

- Validate Output: Always verify data quality post-processing

- Version Everything: Track data lineage and transformations
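For the "Handle Failures" practice, a minimal retry-with-exponential-backoff helper. Which exceptions count as retryable, the attempt count and the delays are illustrative assumptions; `flaky_task` is a hypothetical function that fails twice before succeeding, used only to exercise the helper.

```python
import time

def with_retries(fn, *args, attempts: int = 3, base_delay: float = 0.1,
                 retry_on: tuple = (ConnectionError, TimeoutError)):
    """Call fn(*args), retrying transient errors with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn(*args)
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error to the caller
            # Wait base_delay, 2 * base_delay, 4 * base_delay, ... between tries
            time.sleep(base_delay * 2 ** attempt)

calls = {"n": 0}

def flaky_task(doc_id: int) -> str:
    # Hypothetical task that fails on its first two calls
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient failure")
    return f"processed {doc_id}"

print(with_retries(flaky_task, 42, base_delay=0.01))  # processed 42
print(calls["n"])  # 3
```

Only transient errors are retried; a bug that raises, say, `KeyError` fails immediately instead of being retried pointlessly.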

Tools Comparison for AI Data Processing

| Tool | Best For | Scalability | Learning Curve |
|---|---|---|---|
| multiprocessing | Single machine | 10s of cores | Low |
| Spark | Distributed batch | 1000s of nodes | Medium |
| Ray | ML workloads | 100s of nodes | Low |
| Dask | Python-native | 100s of nodes | Low |
| Beam | Stream + batch | 1000s of nodes | High |

Case Study: Building a RAG Dataset

Requirements

- Process 10M documents

- Generate embeddings for each

- Build vector index

- Ensure quality and deduplication

Parallel Solution

```python
import ray

# extract_and_clean, chunk_documents, generate_embeddings and
# build_distributed_index are placeholder functions for your own pipeline

# Stage 1: Parallel document processing
processed_docs = (
    ray.data.read_parquet("raw_docs/")
    .map_batches(extract_and_clean)
    .map_batches(chunk_documents)
)

# Stage 2: Distributed embedding generation (one GPU per batch task)
embeddings = processed_docs.map_batches(
    generate_embeddings,
    num_gpus=1,
    batch_size=100,
)

# Stage 3: Build index (using FAISS)
index = build_distributed_index(embeddings)
```

Key Takeaways

- Data is the bottleneck in modern AI systems

- Parallel processing is essential for AI-scale data

- Choose the right tool for your scale and use case

- Quality over quantity - parallel quality checks are crucial

- Monitor and validate throughout the pipeline

- Modern frameworks (Spark, Ray, Dask) simplify distributed processing