Lecture 1

Course overview, introduction to big data and the Cloud

Georgetown University

Fall 2023

Are you ready?

ready for BIG DATA?

ready for “The Cloud”?

Agenda for today’s session

- Course and Syllabus Overview

- What is Big Data?

- How do we work today with Big Data?

- How did we get here?

- The modern data platform

- Introduction to bash

- Lab: Linux command line

Bookmark these links!

- Course website: https://gu-dsan.github.io/6000-fall-2023/

- GitHub Organization for your deliverables: https://github.com/gu-dsan6000/

- Slack Workspace: https://dsan6000-fall2023.slack.com

- Instructors email: dsan6000-instructors@georgetown.edu

- Canvas: https://georgetown.instructure.com/courses/172712

These are also pinned on the Slack main channel

Your instructors

Marck Vaisman

- AI & ML Cloud Architect and Data Scientist at Microsoft

- Teaching at GWU since 2015 (9 years) and Georgetown since 2016

- Co-Founder of DataCommunityDC

- R Fanatic

Fun Facts

- I love music and try to play music at the beginning of class, typically EDM. Other genres I love:

- Latin

- Bluegrass

- Chill

- I speak fluent Spanish, I grew up in Venezuela

- Love beer & bourbon

- Goofball

- Westie owner

- I can speak like Donald Duck

Amit Arora

- Principal Solutions Architect - AI/ML at AWS

- Adjunct Professor at Georgetown University

- Multiple patents in telecommunications and applications of ML in telecommunications

Fun Facts

- I am a self-published author https://blueberriesinmysalad.com/

- My book “Blueberries in my salad: my forever journey towards fitness & strength” is written as code in R and Markdown

- I love to read books about health and human performance, productivity, philosophy and Mathematics for ML. My reading list is online!

Anderson Monken

- Data Science Manager at Federal Reserve Board of Governors

- Team uses big data, web development, software development, machine learning, and AI

- Technology and cloud initiatives

- Research focus in international trade and economics

- Adjunct Professor since 2022

- DSAN Program Graduate

Fun Facts

- Amateur car mechanic on several old BMWs

- Can solve a Rubik’s Cube in under a minute

- Canoe’d over 200 miles in Canada

Abhijit Dasgupta

- Data Science Associate Director at AstraZeneca supporting Oncology R&D

- bioinformatics, biomarkers, clinical studies

- autoencoders, survival analysis, signal processing

- Adjunct Professor at Georgetown since 2020

- R and reproducible research evangelist

- Python is cool too!!

- Co-founder of Statistical Programming DC (with Marck Vaisman)

Fun Facts

- I’m a 4th degree black belt in Aikido,

- over 30 years experience providing flyer miles

- Exploring global whiskey, currently on Japan

- Active in community theater, mainly behind but sometimes on-stage.

Your TAs

Isfar Baset

- Earned my Bachelor’s in Computer Science from Grand Valley State University.

- Worked as a Data Analyst for a digital marketing firm (Shift Digital) prior to joining the DSAN program.

- Favorite places to visit around DC: Old Town Alexandria, The Wharf, and Georgetown Waterfront.

- I have a 6-month-old pet kitten named Cairo.

- I love to read books, paint, and watch movies/TV shows during my free time.

Dheeraj Oruganty

- Educational Background: Achieved a Bachelor’s Degree in Computer Science from Jawaharlal Nehru Institute of Technology in Hyderabad.

- Internship: Served as a Machine Learning Intern at Cluzters.ai, contributing to the development of machine vision models tailored for edge devices.

- Personal Interests: Finds joy participating in hikes, meeting new people and embarking on road trips.

- Interesting Tidbits: Explored 6 different countries in the past 90 days.

- Adventurous Journey: Undertook a remarkable 15-hour road trip on a motorcycle in India!

Lianghui Yi

- Undergraduate major in Information Engineering

- Co-founder of a SaaS company in China

- Currently a 1st-year student in DSAN

BIG DATA

Where does it come from?

How is it being created?

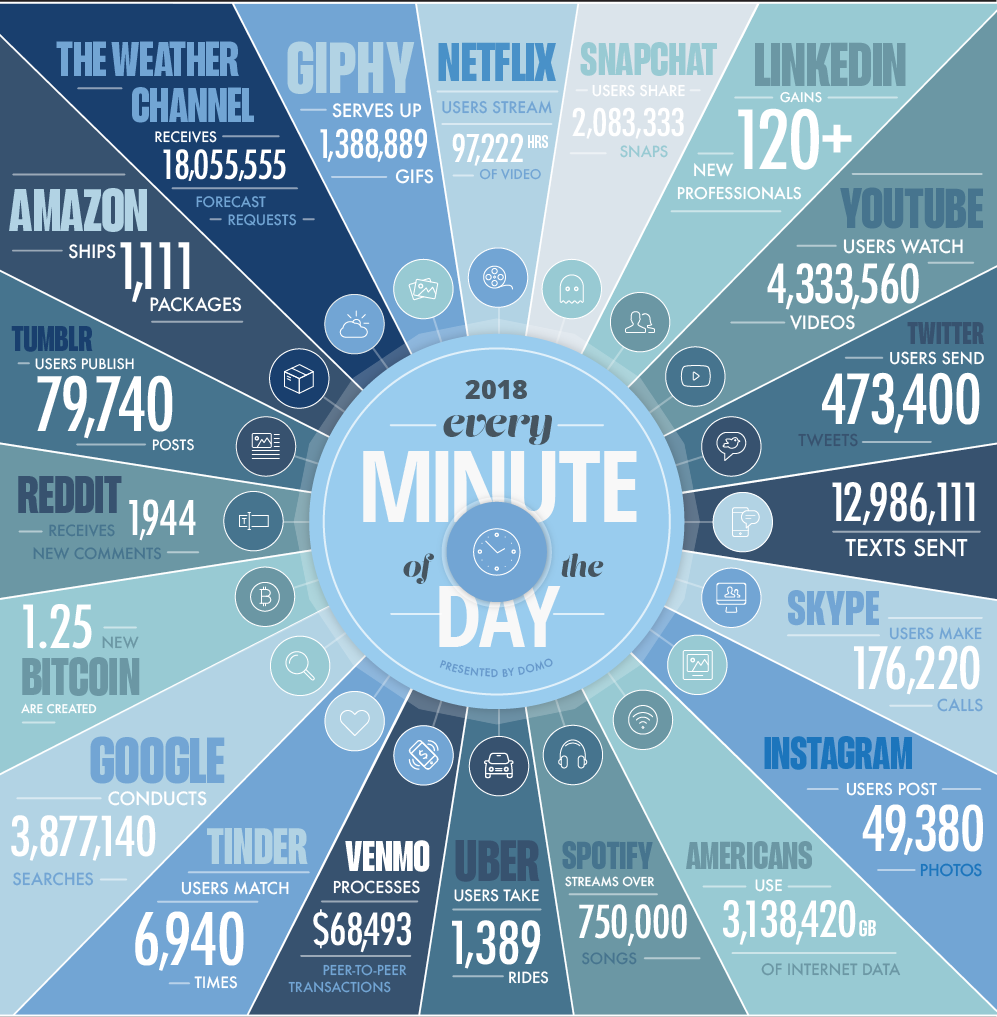

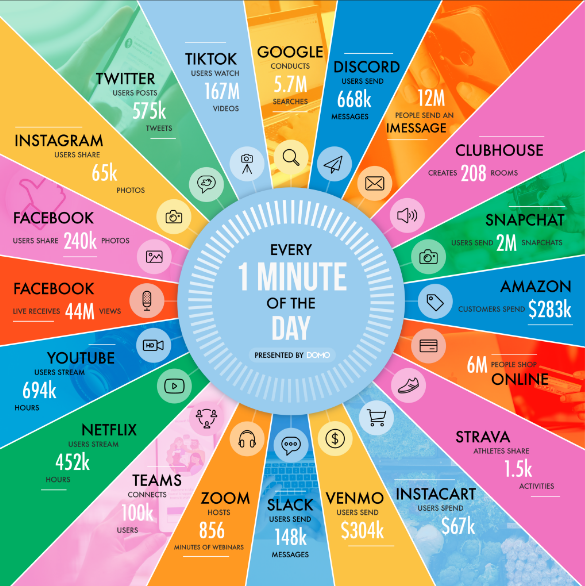

In one minute of time (2018)

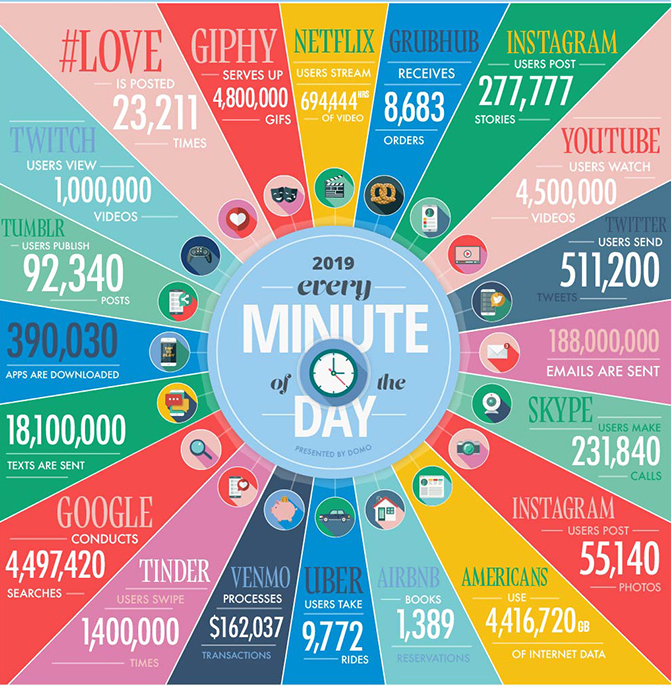

In one minute of time (2019)

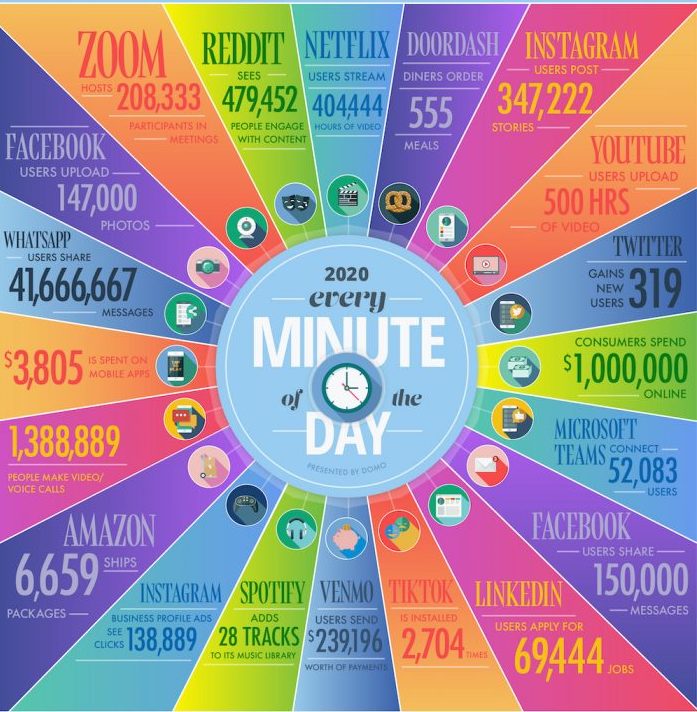

In one minute of time (2020)

In one minute of time (2021)

A lot of data is machine generated and happening online

We can record every:

- click

- ad impression

- billing event

- video interaction

- server request

- transaction

- network message

- fault

- and more…

Humans generate a substantial amount (everything is digital)!

- Tiktok

- Tweets

- Videos

- Yelp reviews

- Facebook posts

- Stack Overflow posts

- etc.

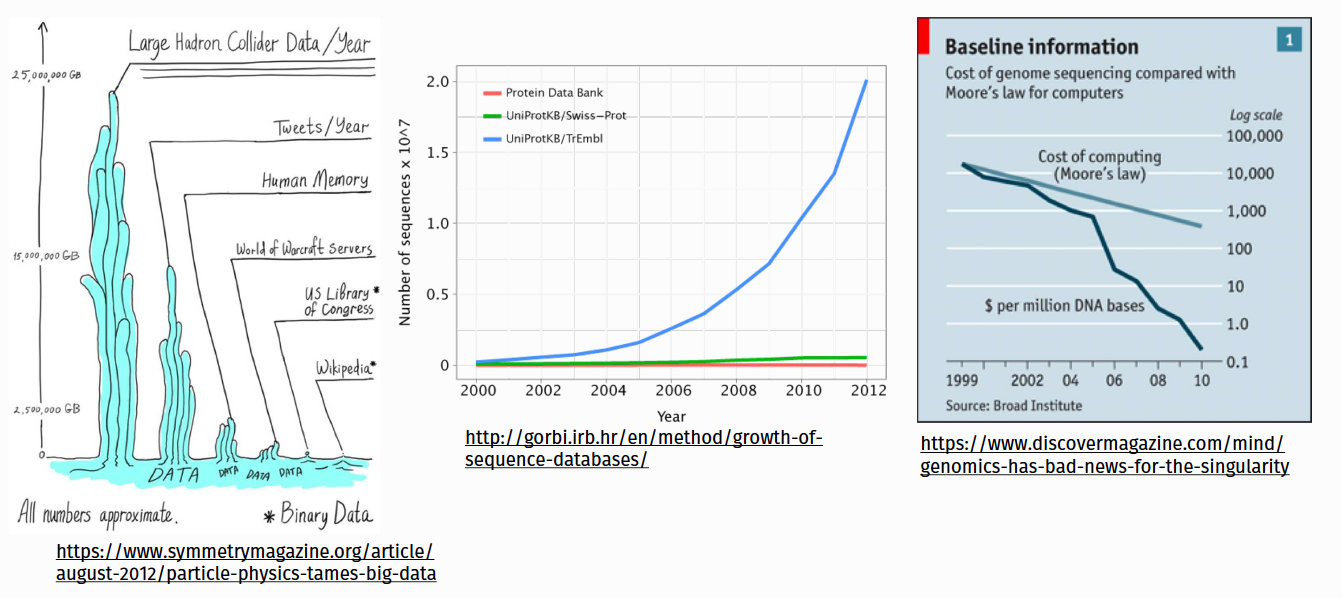

Health and scientific computing generate a lot too!

Big Data is Dead!

More from the MotherDuck Blog

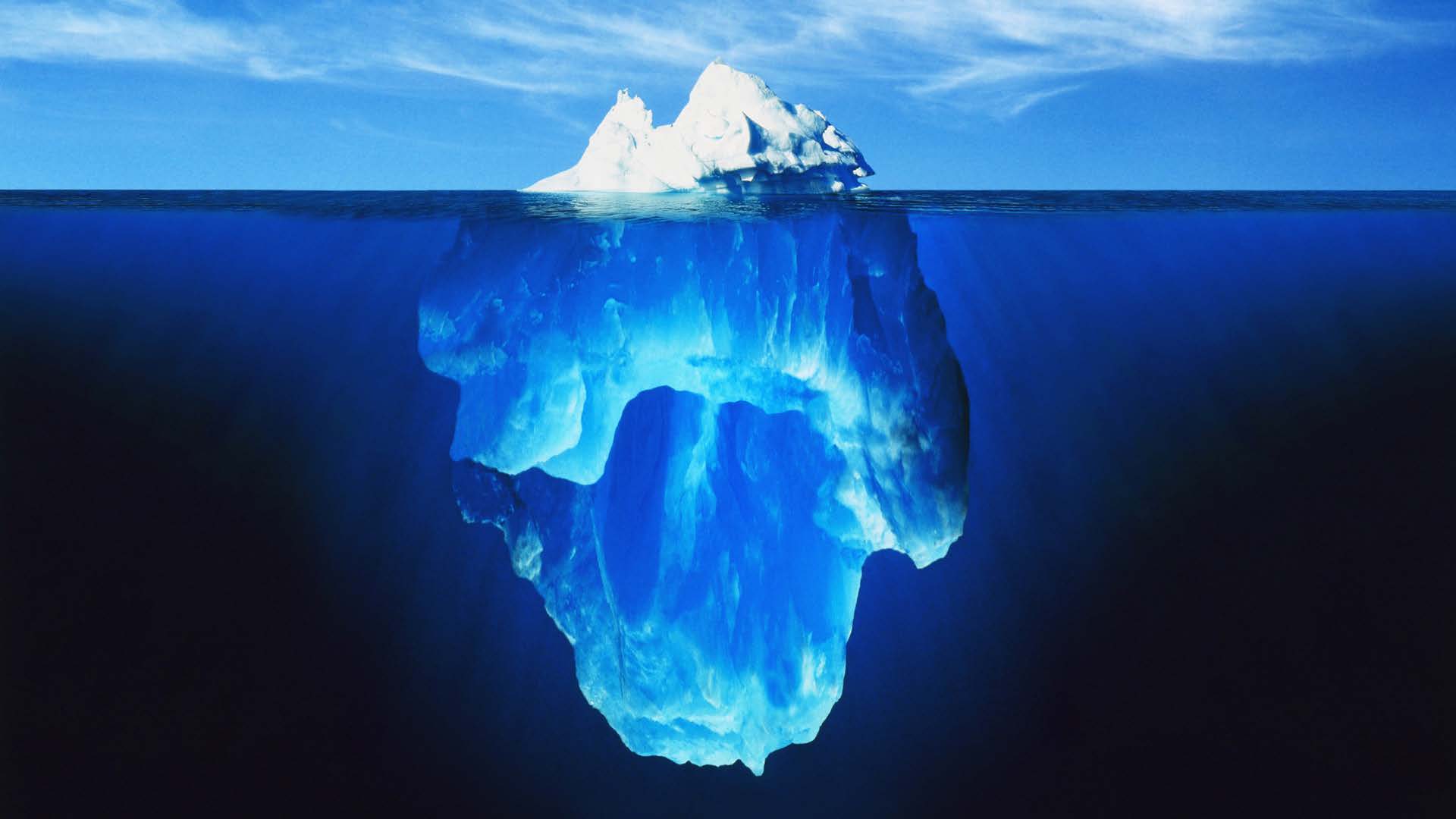

Data is growing exponentially and old ways don’t work

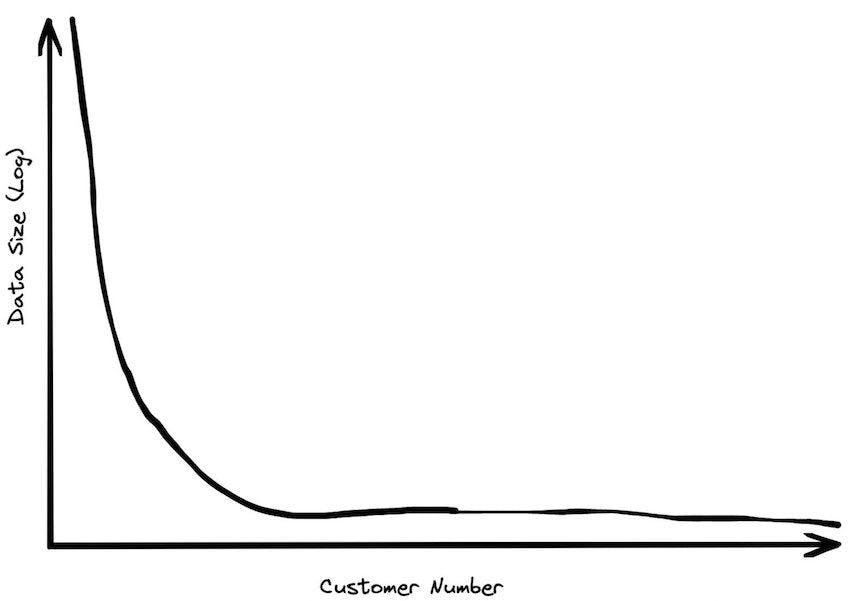

Most organizations don’t really have much data

Data sizes grow faster than compute sizes

Data workloads can fit on modern single machines

You are used to working with single-threaded popular data analysis tools that are memory constrained

Traditional Big Data Definitions

Wikipedia

“In essence, Big Data is a term for a collection of datasets so large and complex that it becomes difficult to process using traditional tools and applications. Big Data technologies describe a new generation of technologies and architectures designed to economically extract value from very large volumes of a wide variety of data, by enabling high-velocity capture, discovery and/or analysis”

O’Reilly

“Big data is when the size of the data itself becomes part of the problem”

EMC/IDC

“Big data technologies describe a new generation of technologies and architectures, designed to economically extract value from very large volumes of a wide variety of data, by enabling high-velocity capture, discovery, and/or analysis.”

IBM: (The famous 3-V’s definition)

- Volume (Gigabytes -> Exabytes)

- Velocity (Batch -> Streaming Data)

- Variety (Structured, Semi-structured, & Unstructured)

Additional V’s

- Variability

- Veracity

- Visualization

- Value

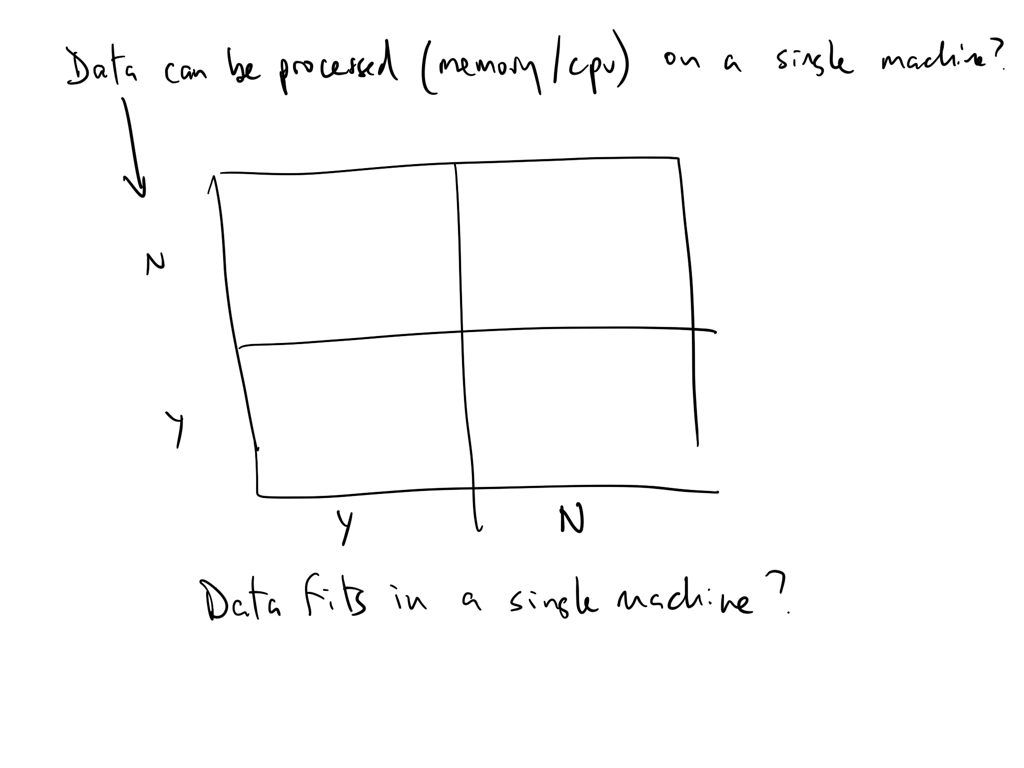

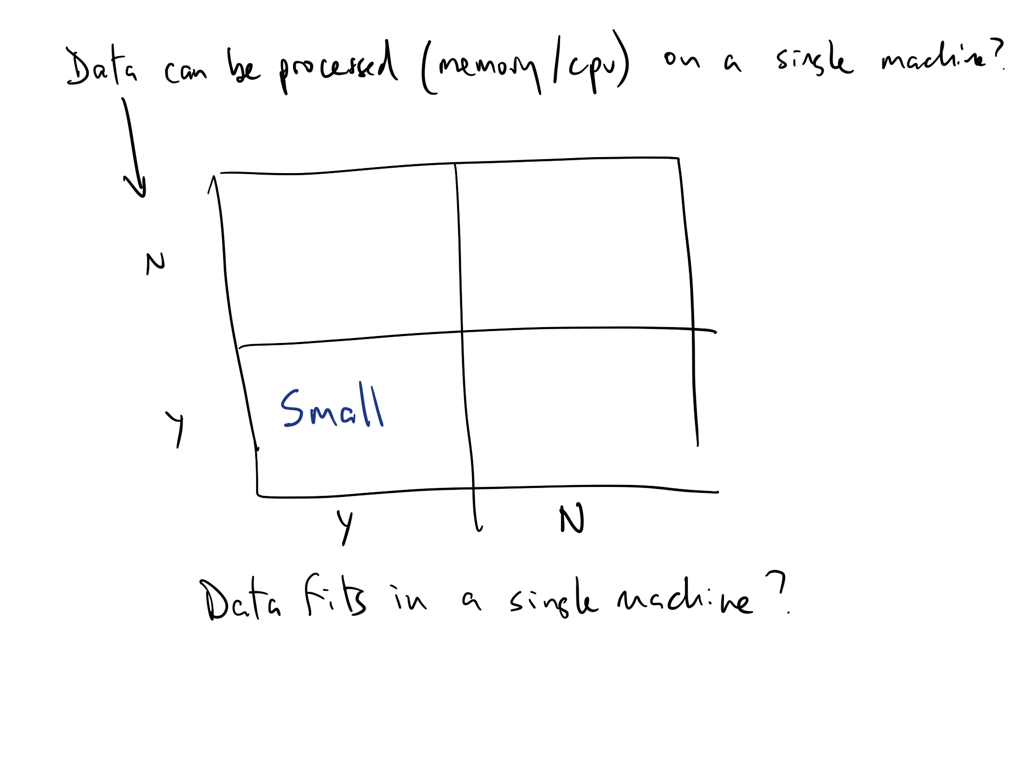

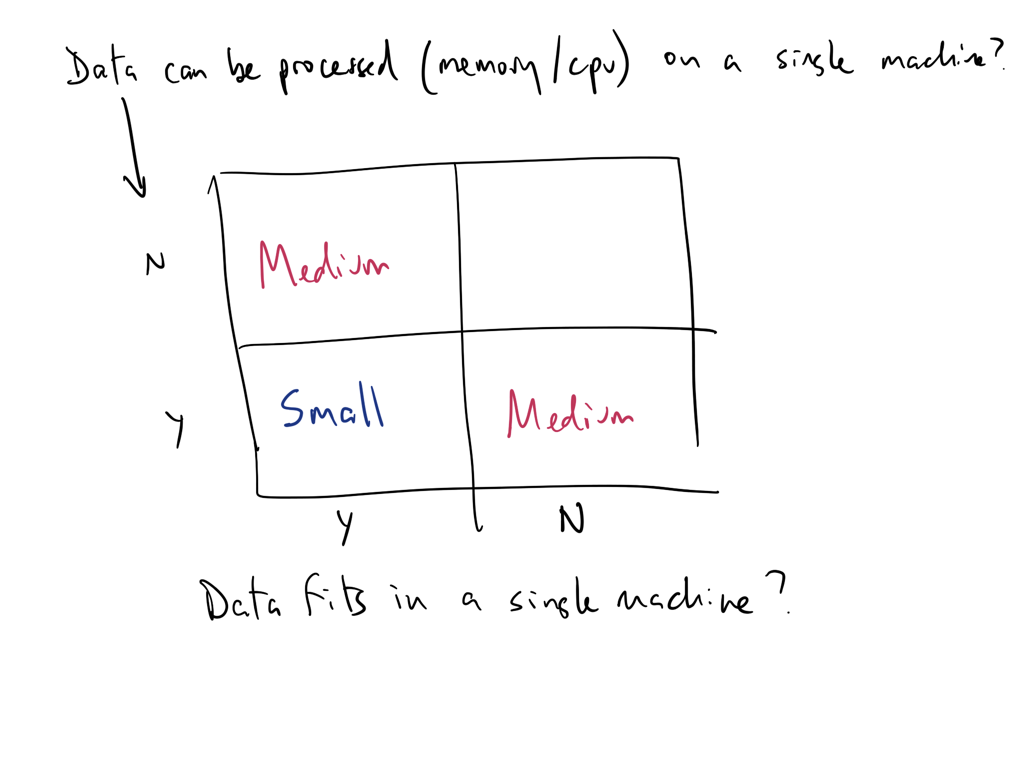

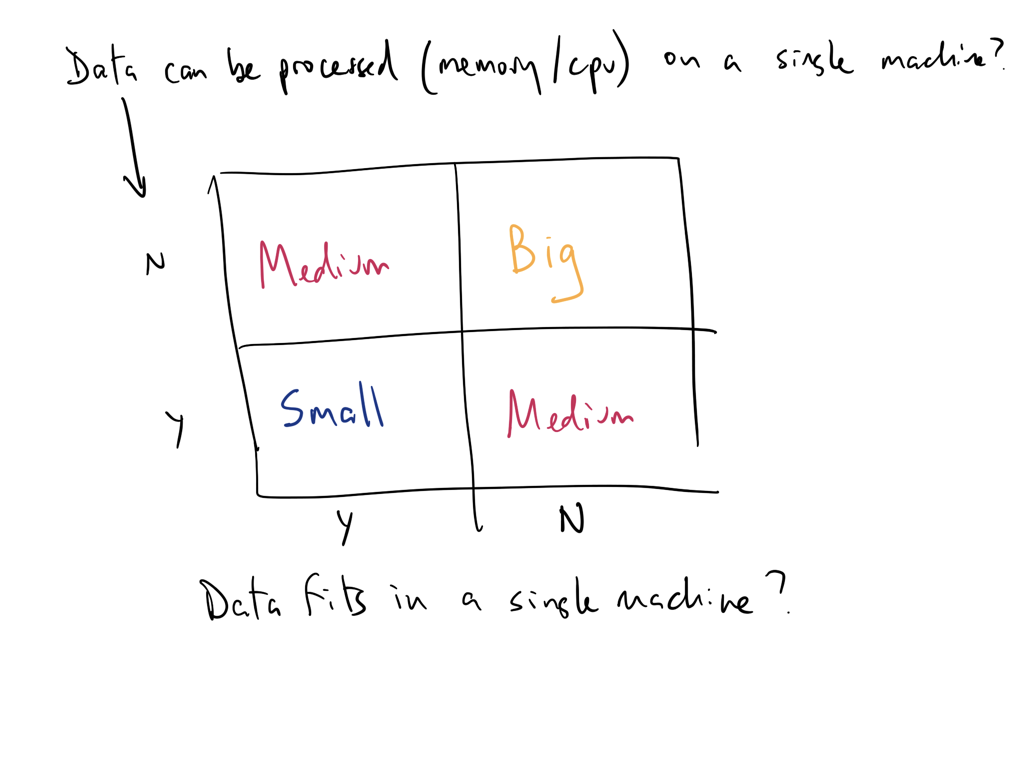

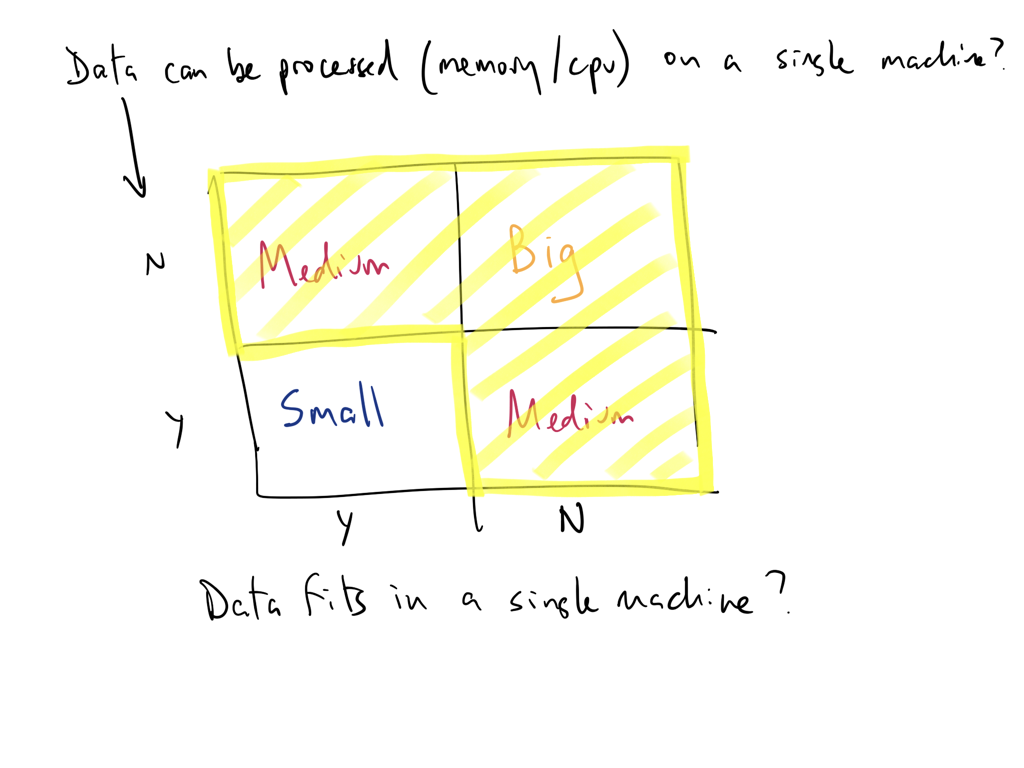

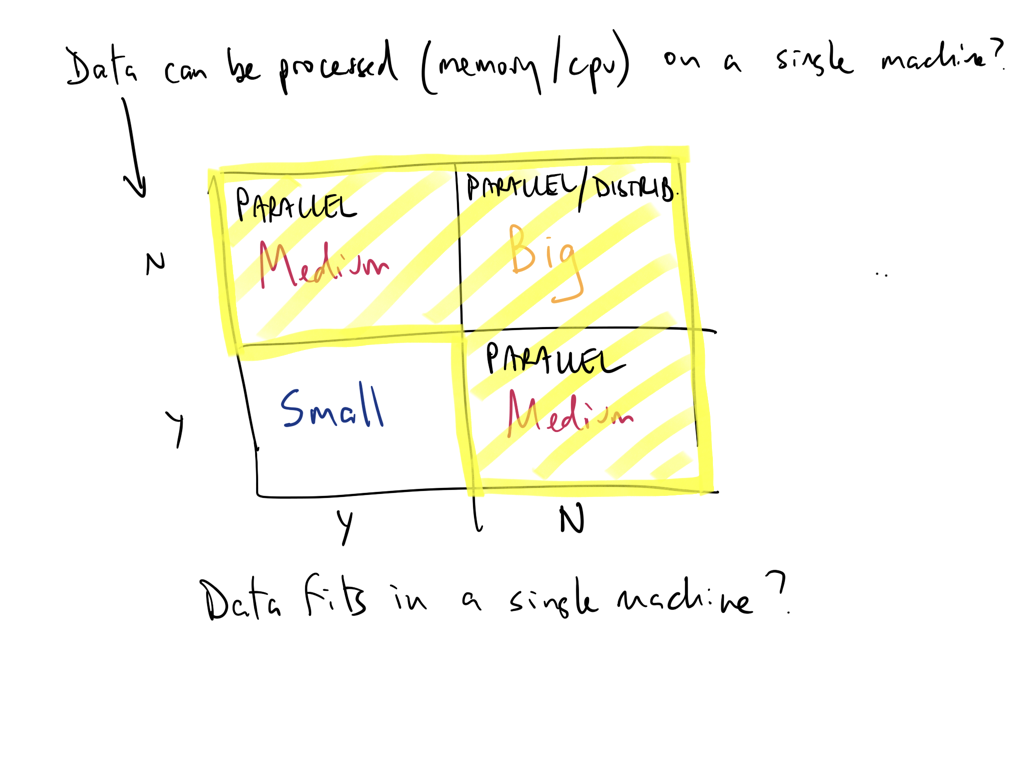

Think of data size as a function of processing and storage

Can you analyze/process your data on a single machine?

Can you store (or is it stored) on a single machine?

If any of the answers is no, then you have a big-ish data problem!
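One rough way to put a number on these questions is to compare the size of the data on disk with the memory you expect to have. The sketch below does that with du. The placeholder file and the 8 GiB budget are assumptions for illustration, and on-disk size only approximates what an analysis tool needs in memory.

```shell
# Hypothetical dataset: a temp directory holding one 1 MiB placeholder file.
data_dir=$(mktemp -d)
head -c 1048576 /dev/zero > "$data_dir/part-0000.bin"

# Size of the dataset on disk, in kilobytes.
data_kb=$(du -sk "$data_dir" | cut -f1)

# Assumed memory budget: 8 GiB, expressed in kilobytes.
mem_budget_kb=$((8 * 1024 * 1024))

if [ "$data_kb" -lt "$mem_budget_kb" ]; then
  verdict="fits on one machine"
else
  verdict="big-ish data problem"
fi
echo "$data_kb KB of data: $verdict"
```

Pointing data_dir at a real folder and setting the budget to your own machine's memory gives a first, coarse answer.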

We live in a medium sized data world

The definition of “big data” is relative!

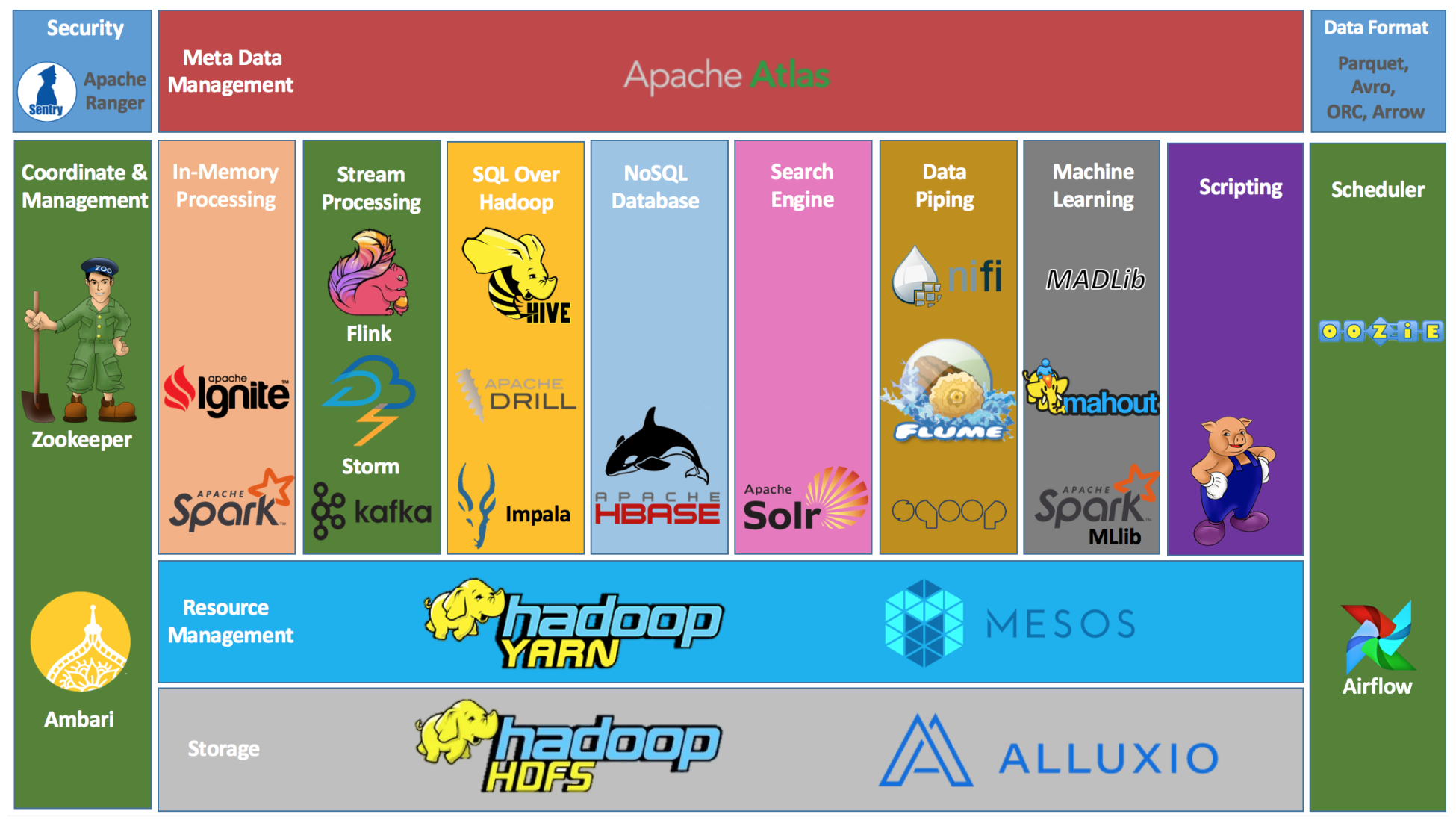

Tools at-a-glance

Languages, libraries, and projects

We’ll talk briefly about Apache Hadoop today but we will not cover it in this course.

Cloud Services

- Amazon Web Services (AWS)

- Azure

Other:

Additional links of interest

Matt Turck’s Machine Learning, Artificial Intelligence & Data Landscape (MAD)

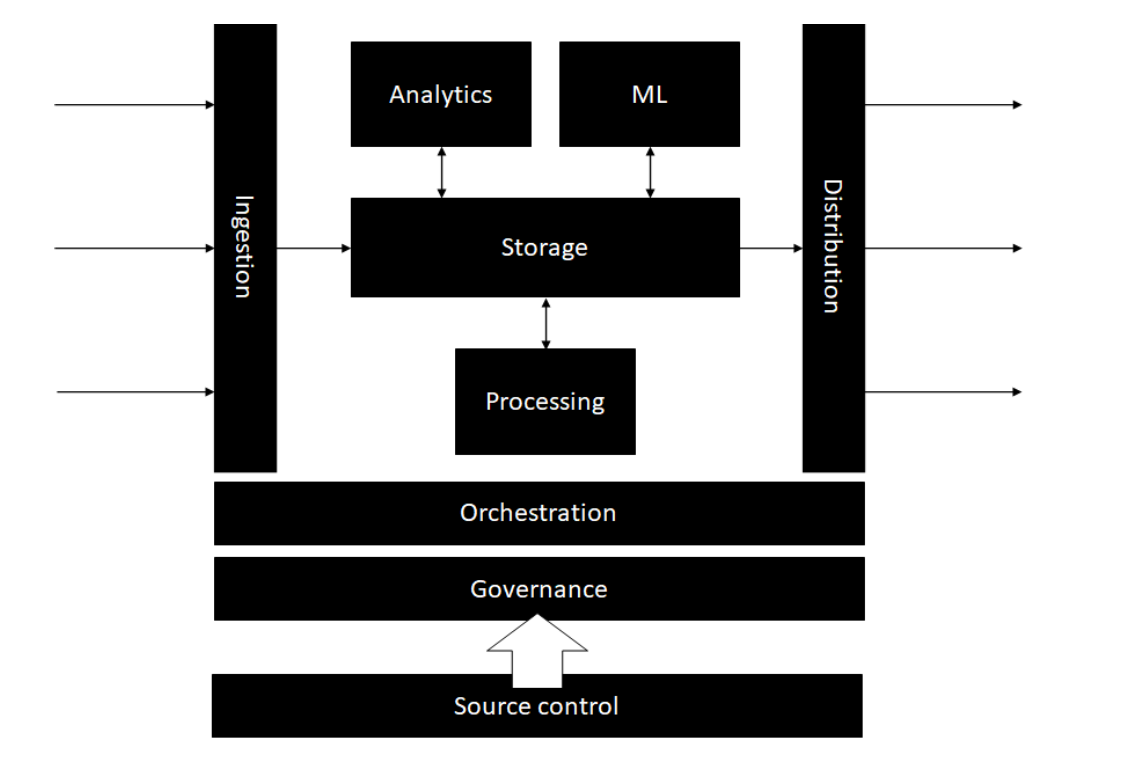

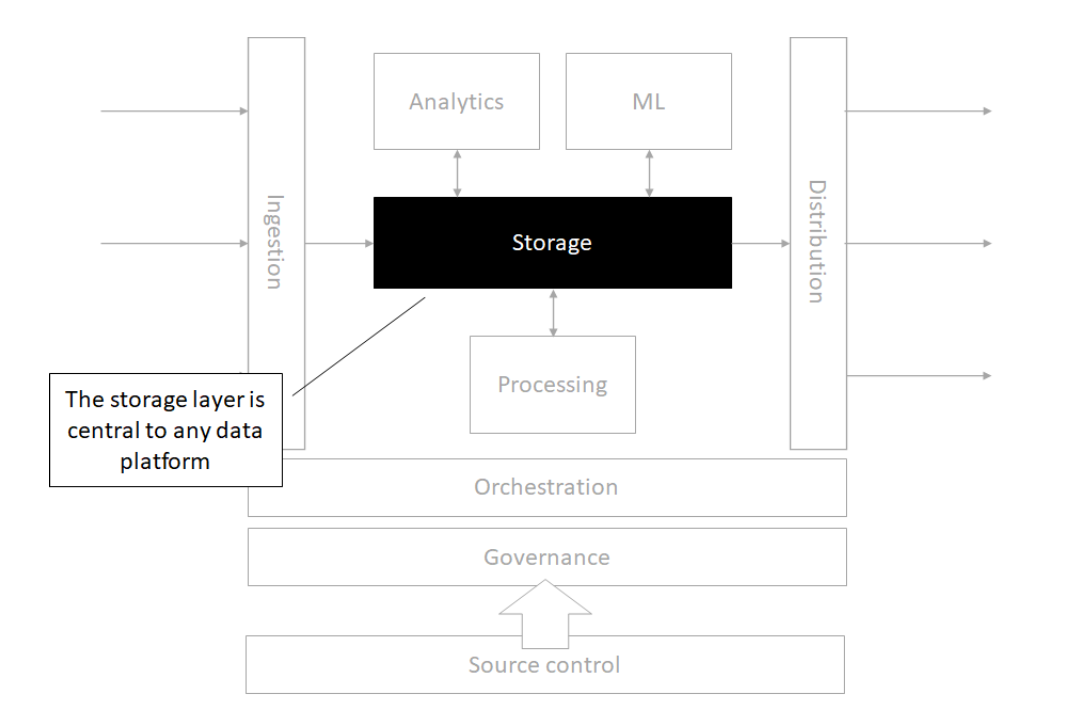

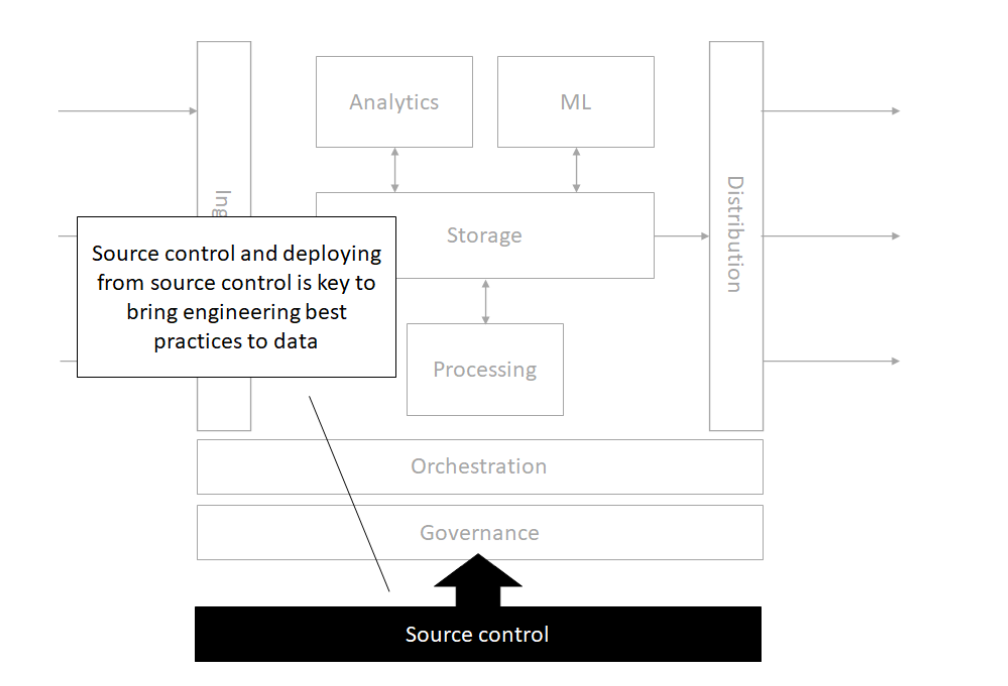

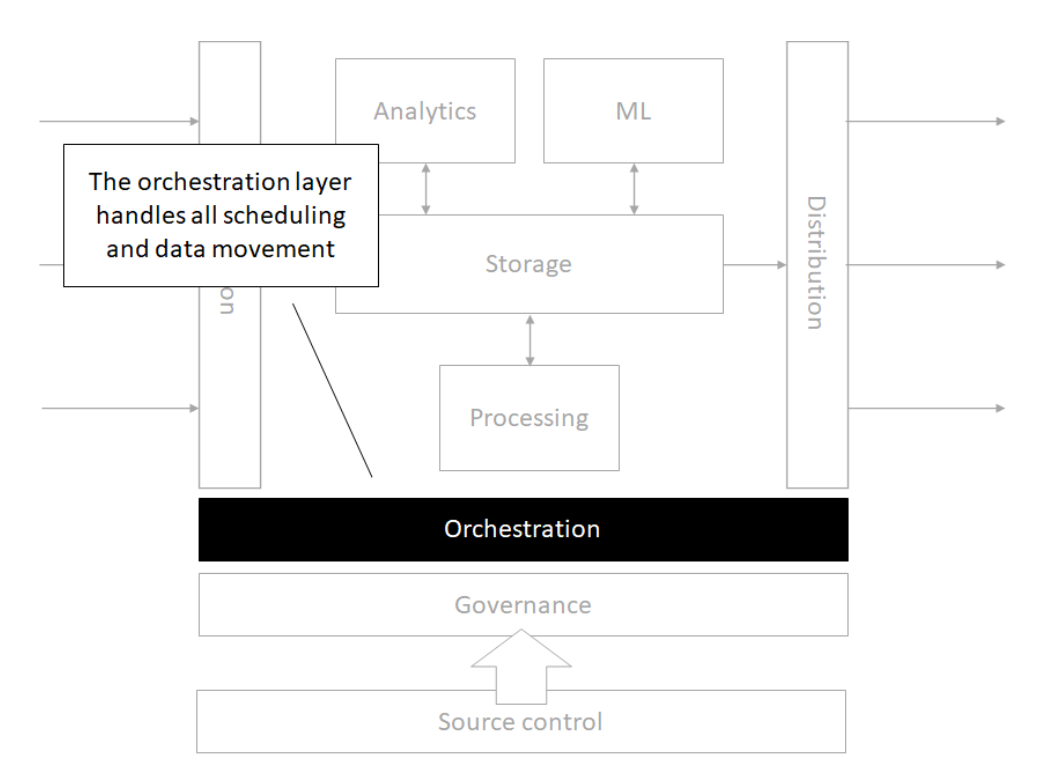

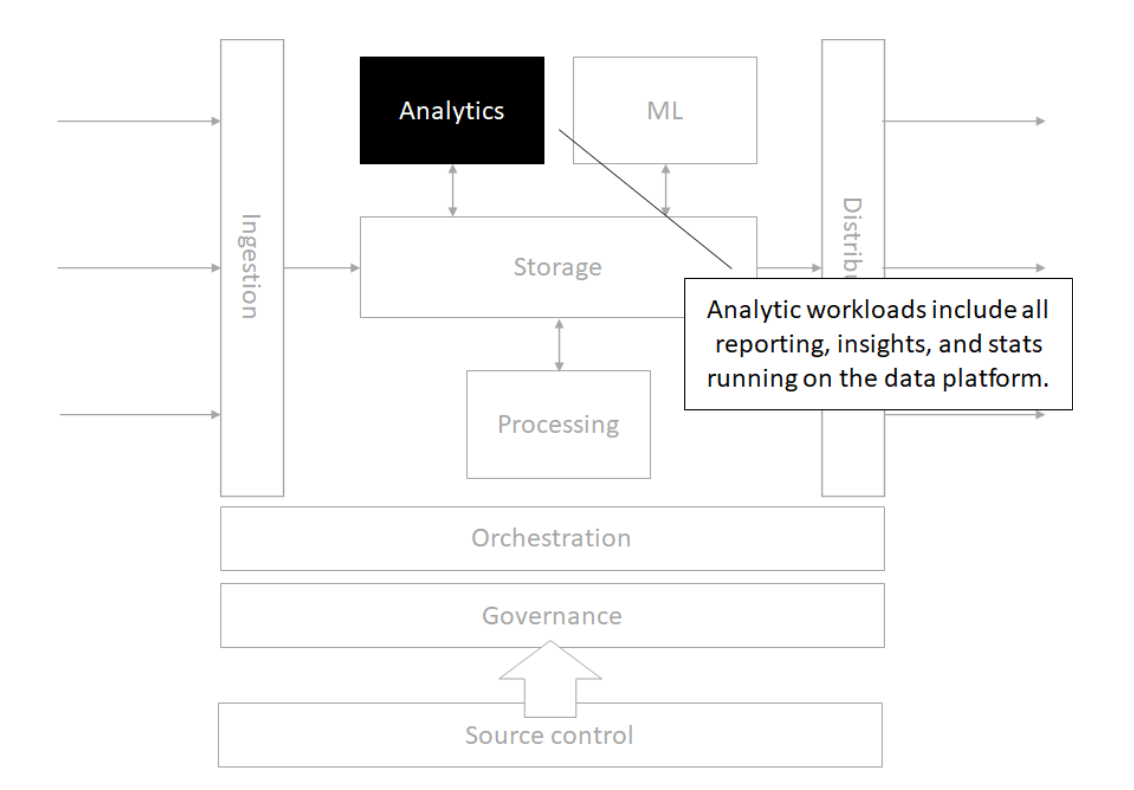

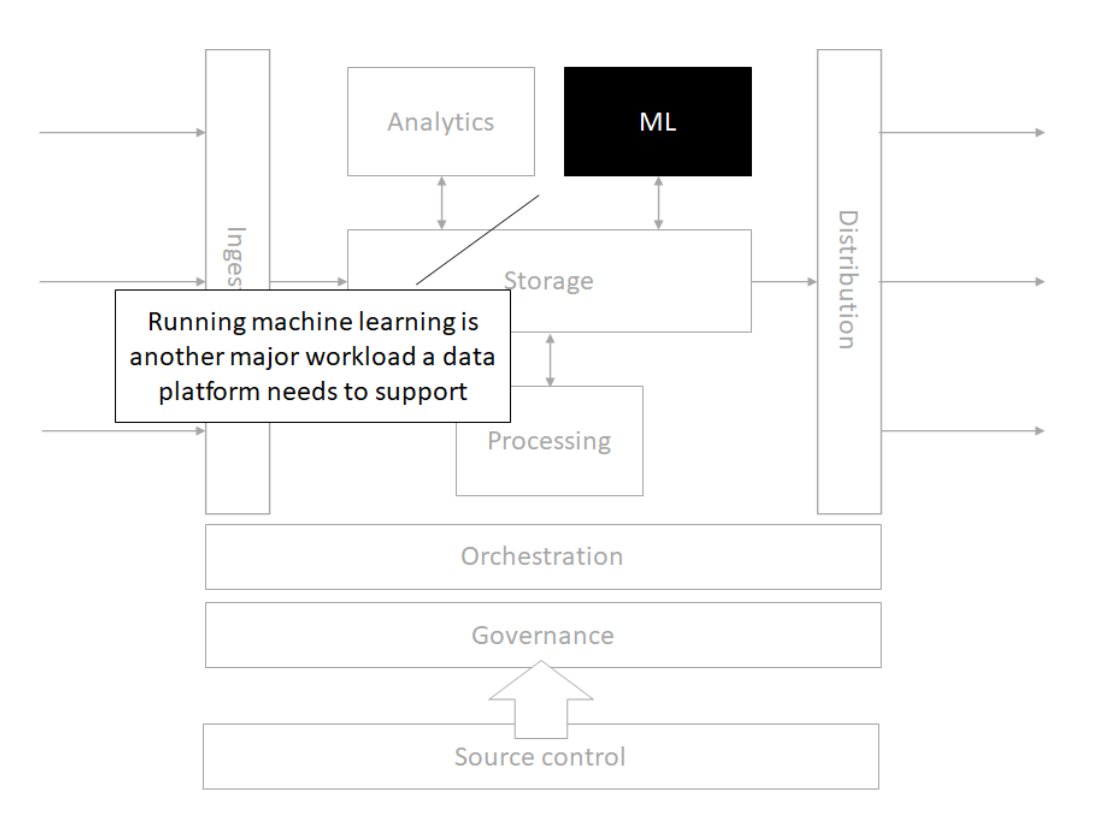

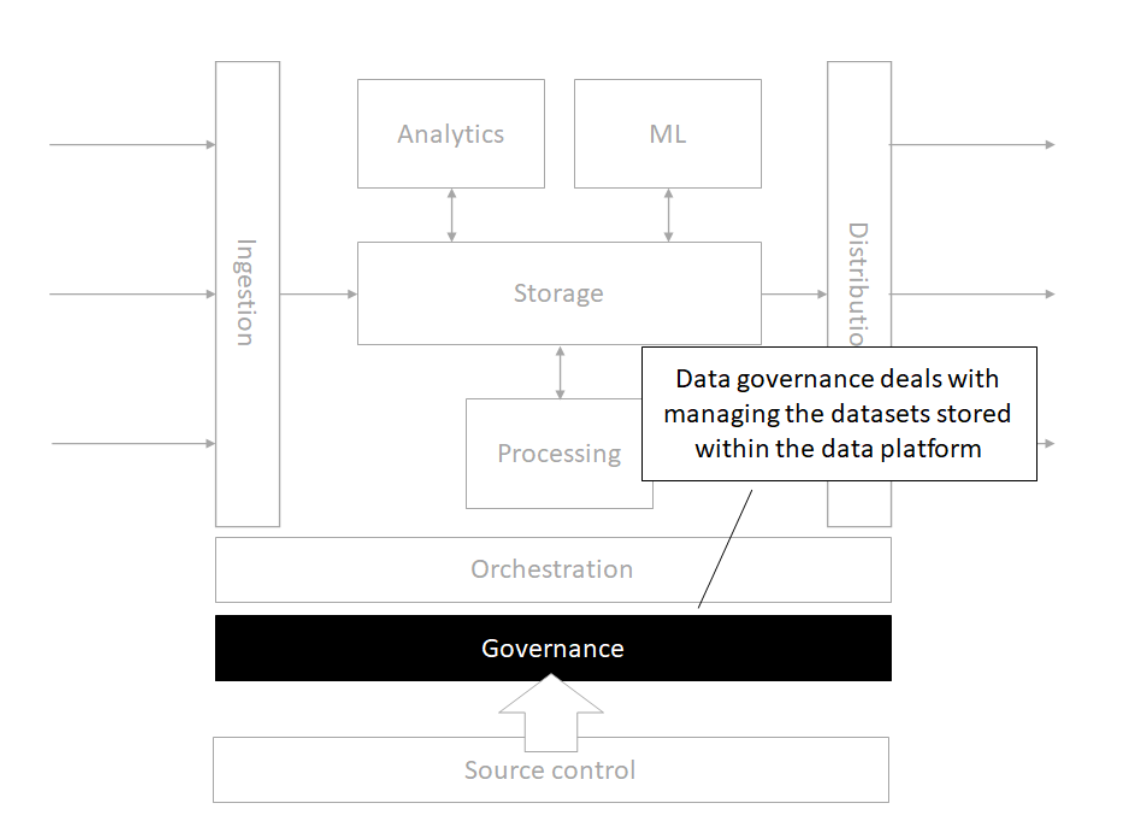

The modern data architecture

Architecture

Storage

Source control

Orchestration

Processing

Analytics

Machine Learning

Governance

Distributed computing

Yesterday’s hardware for big data processing

The 1990’s solution

One big box, all processors share memory

This was:

- Very expensive

- Low volume

It was all premium hardware. And yet it still was not big enough!

Enter commodity hardware!

Consumer-grade hardware

Not expensive, premium nor fancy in any way

Desktop-like servers are cheap, so buy a lot!

- Easy to add capacity

- Cheaper per CPU/disk

But

Needed more complex software to be able to run on lots of smaller/cheaper machines.

Problems with commodity hardware

Failures

- 1-5% hard drives/year

- 0.2% DIMMs/year

Network speed vs. shared memory

- Much more latency

- Network slower than storage

Uneven performance

Big data processing systems were built on average machines (commodity hardware) where components failed pretty often!
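To see why "pretty often" matters at scale, here is a small worked calculation using the 1-5% annual hard drive failure rate quoted above. The cluster size (1,000 machines with 10 drives each) is a made-up assumption, not a figure from the slides.

```shell
# Hypothetical cluster: 1,000 machines with 10 hard drives each.
machines=1000
drives=$((machines * 10))

# Annual drive failure rates from the slide: 1% to 5%.
low=$((drives * 1 / 100))
high=$((drives * 5 / 100))

# Spread the low estimate over 52 weeks (integer division rounds down).
per_week_low=$((low / 52))
echo "Expect $low to $high drive failures per year (at least $per_week_low per week)"
```

Even at the optimistic end, a cluster of this assumed size loses a drive every week or so, which is why the software has to treat failure as routine.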

Hang on to this thought!

Meet Doug Cutting

- In 1997, Doug Cutting started writing the first version of Lucene (a full text search library).

- In 2001, Lucene moved to the Apache Software Foundation, and Mike Cafarella joined Doug to create a Lucene subproject called Nutch, a web crawler. Nutch uses Lucene to index the contents of a web page as it crawls it.

- Nutch and Lucene were deployed on a single machine (single core processor, 1GB RAM, 8 HDDs ~ 1TB), achieved decent performance, but they needed something that would be scalable enough to be able to index the web.

Doug and Mike set out to improve Nutch

They needed to build some kind of distributed storage layer to be the foundation of a scalable system. They came up with these requirements:

- Schemaless

- Durable

- Capable of handling component failure

- Automatically rebalanced

Does this sound familiar?

In 2003 and 2004 Google publishes two seminal papers

The Google File System (GFS) Paper

Describes how Google stored its information at scale, and shows that a reliable, highly available storage system can be built on commodity machines where failures are the norm rather than the exception.

GFS is:

- optimized for special application environment

- fault tolerance is built in

- centralized metadata management

- simplify the operation semantics

- decouple I/O and metadata operations

The Google MapReduce Paper

Describes how Google processes data, at scale using MapReduce, a paradigm based on functional programming. MapReduce is an approach and infrastructure for doing things at scale. MapReduce is two things:

- A data processing model named MapReduce

- A distributed, large scale data processing paradigm.

Which provides the following benefits:

- Moves the computation to the data

- Automatic parallelization and distribution

- Fault tolerance

- I/O scheduling

- Integrated status and monitoring

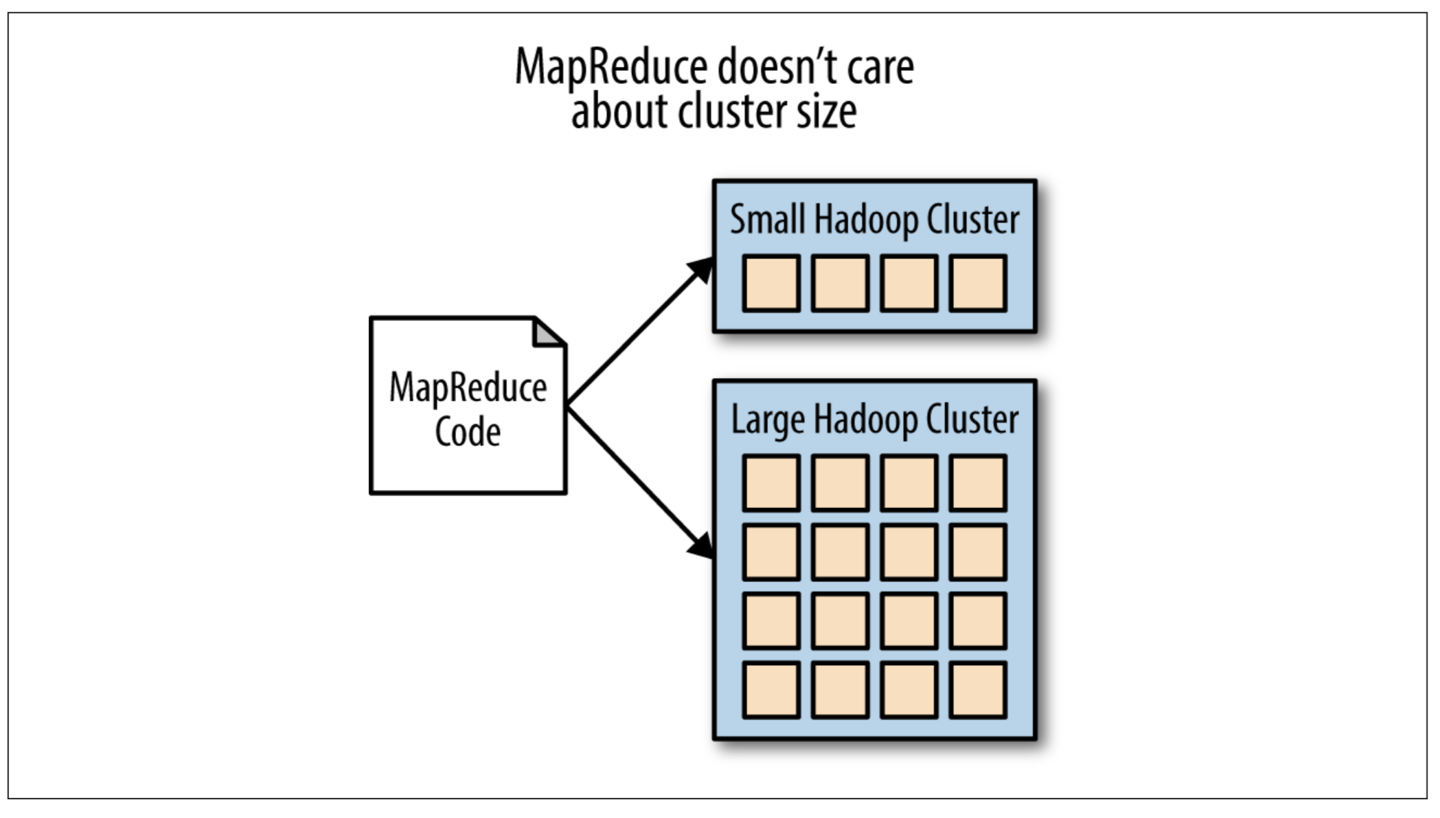

The MapReduce model and advantages

The model

- Map function takes an input pair and produces a set of intermediate key/value pairs. The MapReduce library groups together all intermediate values associated with the same intermediate key and passes them to the Reduce function.

- Reduce function accepts an intermediate key and a set of values for that key. It merges together these values to form a possibly smaller set of values. Typically just zero or one output value is produced per Reduce invocation.
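The same map, group, reduce shape shows up in a classic single-machine word count built from shell pipes. This is only an analogy to the model described above, not how Google's MapReduce or Hadoop is implemented, and the input text is made up.

```shell
# Hypothetical input: three short "documents", one per line.
input='the quick brown fox
the lazy dog
the fox'

# Map: emit one word per line (each word acts as an intermediate key).
# Group: sort brings identical keys next to each other.
# Reduce: uniq -c collapses each group of identical keys into one count.
counts=$(printf '%s\n' "$input" | tr -s ' ' '\n' | sort | uniq -c | awk '{print $2, $1}')
printf '%s\n' "$counts"
```

In a distributed setting the map and reduce steps run on many machines at once, and the grouping step moves data between them over the network.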

The advantages

- The model is relatively easy to use, even for programmers without experience with parallel and distributed systems since it handles parallelization, fault-tolerance, locality optimization, and load balancing.

- A large variety of problems are easily expressible as MapReduce computations.

- The paper describes an implementation of MapReduce that scales to large clusters comprising thousands of machines.

The genesis of Hadoop: an implementation of Google’s ideas

- Using the Google papers as a specification, they started implementing the ideas in Java in 2004 and created the Nutch Distributed File System (NDFS).

- The main purpose of this file system was to abstract the cluster’s storage so that it presents itself as a single, reliable, file system.

- Another first-class feature of the system was its ability to handle failures without operator intervention and to run on inexpensive, commodity hardware components

- In 2006, Hadoop was created by moving NDFS (which became HDFS) and the MapReduce implementation out of Nutch. Hadoop 0.1.0 was released. 1.8 TB of data was sorted on 188 nodes in 48 hours. Doug Cutting goes to work at Yahoo!

- In 2007, LinkedIn, Twitter and Facebook started adopting this new tool and contributing back to the project. Yahoo! is running their first production Hadoop cluster with 1,000 machines.

- In 2008, Yahoo! is running a 10,000-core cluster. A world record is set for the fastest sort of 1 TB: 209 seconds using a 910-node cluster. Cloudera is formed.

- In 2009, Yahoo!’s cluster is 24,000 cores and claims to sort 1TB in 62 seconds. Amazon introduces Elastic MapReduce. HDFS and MapReduce become their own separate sub-projects.

- In 2010, Yahoo!’s cluster is 4,000 nodes and ~70PB, Facebook’s is 2,300 nodes and ~40PB.

- In 2011, Yahoo!’s cluster is 42,000 nodes

- In 2017, Twitter’s Hadoop clusters hold over 500 petabytes of data. The biggest cluster has over 10k nodes.

Trivia question: why is it named Hadoop?

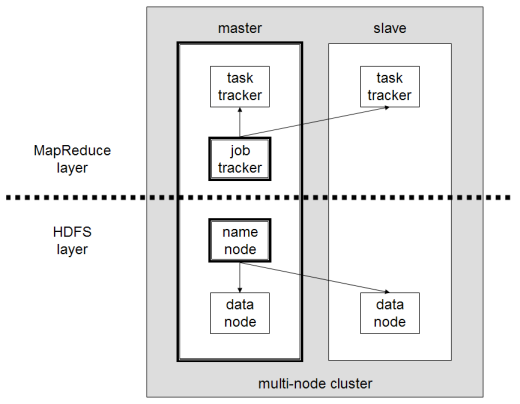

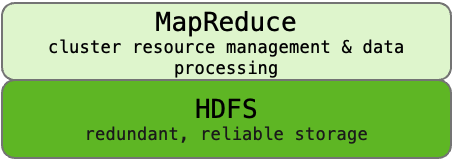

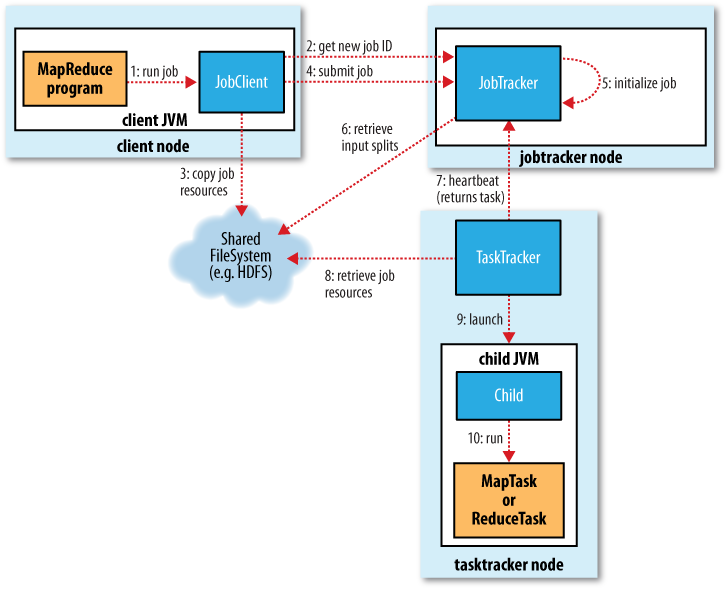

What was Hadoop 1.0: MapReduce Engine and HDFS

MapReduce performs the computations and manages the cluster

- The Job Tracker is the master planner

- The Task Tracker runs each task

HDFS stores the data

Hadoop 1.0 Problems

- Monolithic

- MapReduce had too many responsibilities

- Assigning cluster resources

- Managing job execution

- Doing data processing

- Interfacing with client applications

- Only supported MapReduce

- Had a single NameNode to manage the cluster (single point of failure)

- Batch oriented and extremely inefficient with iterative queries

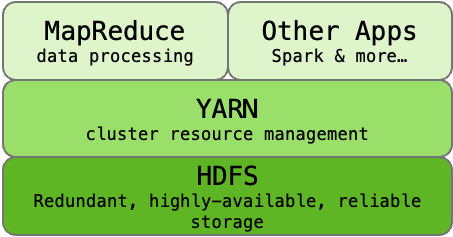

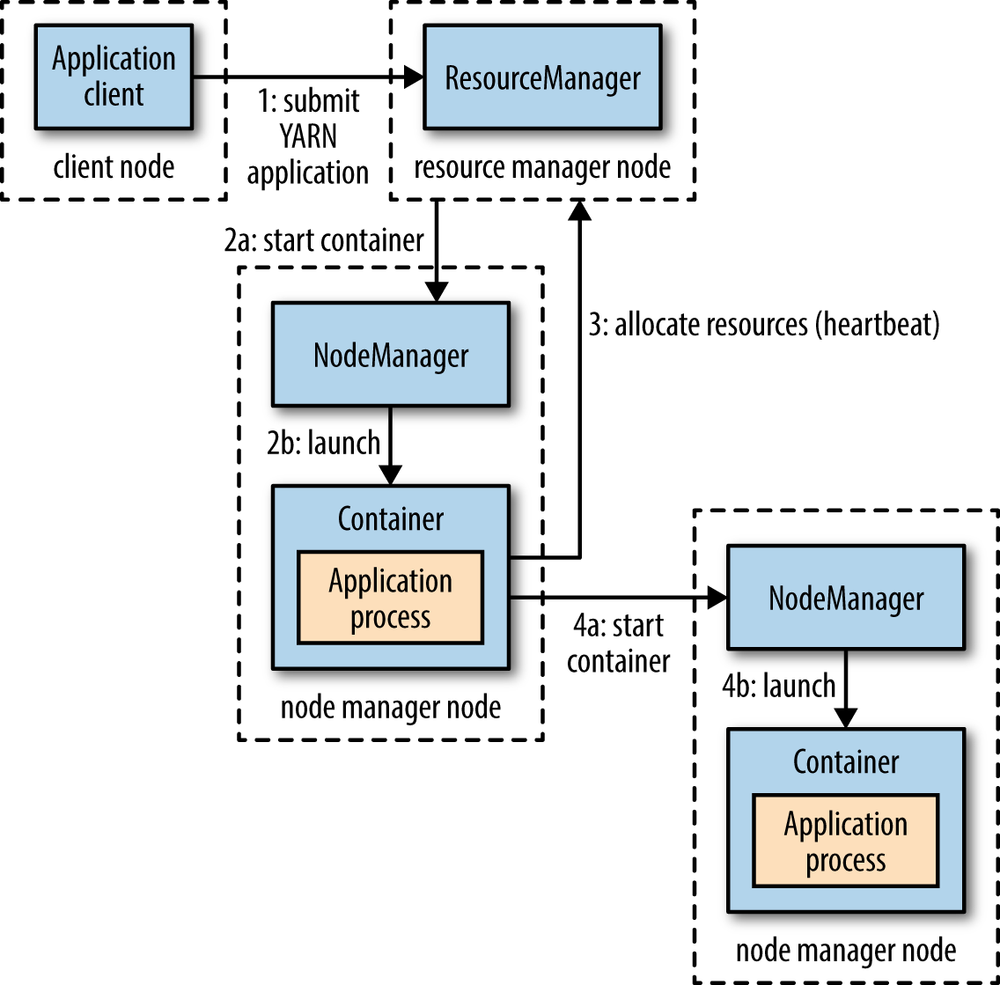

YARN & Hadoop 2.0

- Hadoop 2.0 was released in 2013

- Cluster management capability gets pulled out of MapReduce and becomes YARN (Yet Another Resource Negotiator), decoupling cluster operations from the data pipeline

- Allowed for other applications to run on a cluster

A MapReduce Job (1.0)

A MapReduce Job (2.0+)

How YARN manages the Cluster

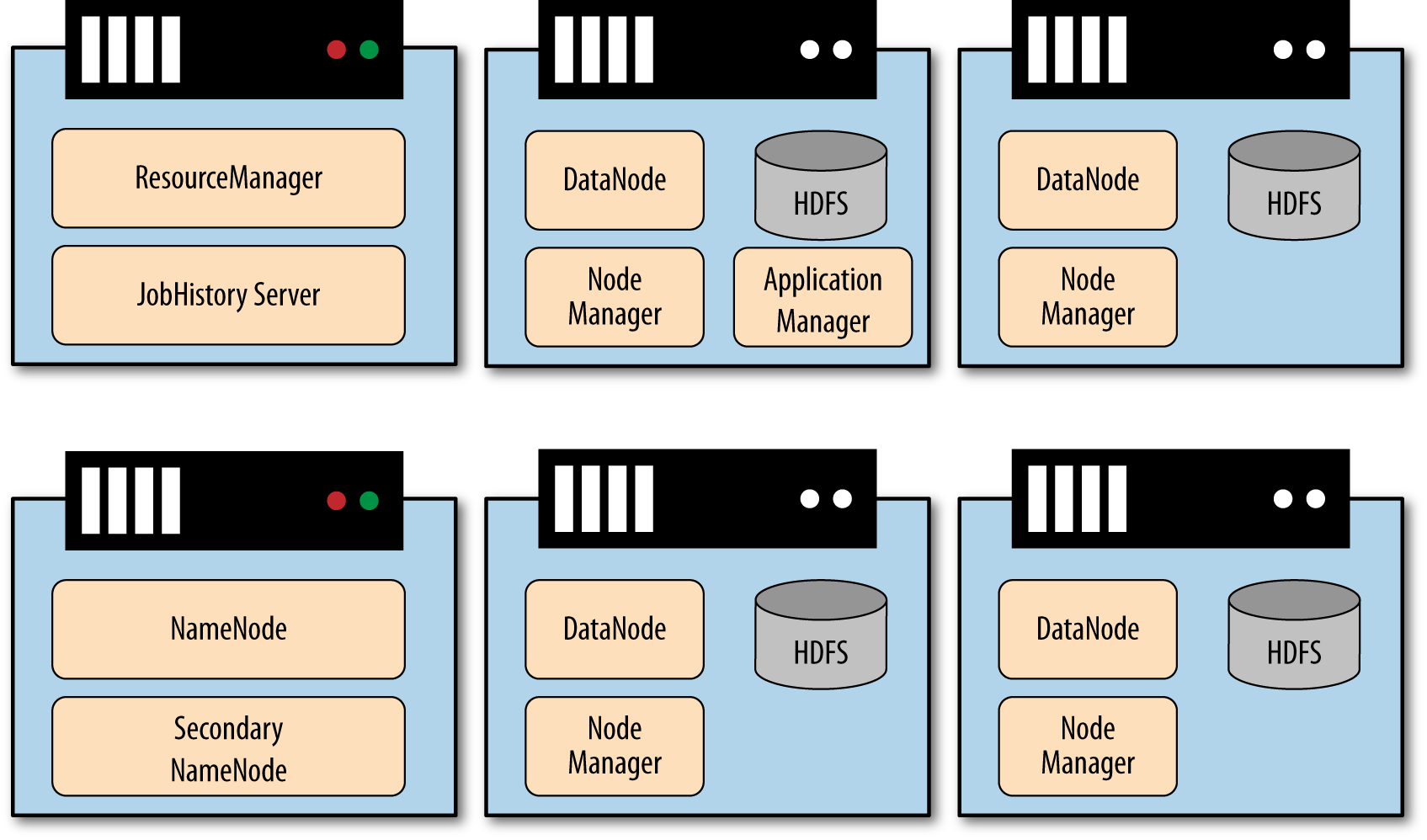

The Hadoop Architecture is Scalable

Hadoop 3.0 (Released 2017)

- Supports multiple standby NameNodes.

- Supports multiple NameNodes for multiple namespaces.

- Storage overhead reduced from 200% to 50%

- Supports GPUs

- Support for Microsoft Azure Data Lake and Aliyun Object Storage System file-system connectors

- Rewrite of Hadoop Shell

- MapReduce task Level Native Optimization.

- Introduces more powerful YARN

The Current Hadoop Ecosystem: https://hadoopecosystemtable.github.io/

Running and scaling distributed computations and clusters is so much easier today!

Yesteryear (2005-2020)

- On premise hardware (compute and storage)

- Tools and platforms had to be manually deployed and configured

- Hard to immediately scale

- If using the cloud, you had to upload the data

Today (2020 +)

- Cloud first approach

- The data is likely already accessible online (you don’t need to upload it) in some kind of storage platform

- Services use high-level abstractions

- Plugins and IDEs make it easier

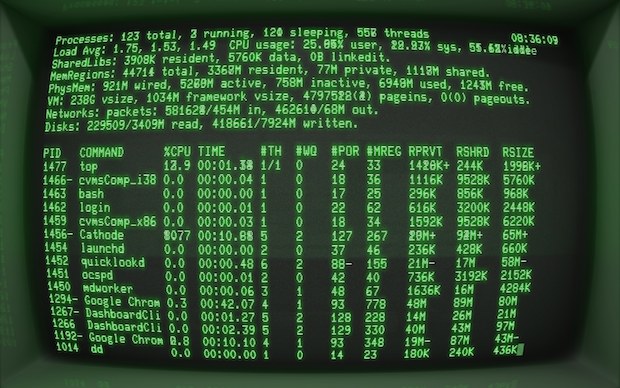

Linux Command Line

Terminal

- Terminal access was THE ONLY way to do programming

- No GUIs! No Spyder, Jupyter, RStudio, etc.

- Coding is still more powerful than graphical interfaces for complex jobs

- Coding makes work repeatable

BASH

- Created in 1989 by Brian Fox

- Brian Fox also built the first online interactive banking software

- BASH is a command processor

- Connection between you and the machine language and hardware

The Prompt

username@hostname:current_directory $

What do we learn from the prompt?

- Who you are - username

- The machine where your code is running - hostname

- The directory where your code is running - current_directory

- The shell type - $ - this symbol is the usual prompt for a regular user in BASH and similar shells (the root user typically sees #)
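Each piece of the prompt can also be asked for directly. The sketch below rebuilds a prompt-like line from three commands. It uses uname -n for the machine name (the hostname command reports the same thing where it is installed), and the variable names are just for illustration.

```shell
me=$(whoami)      # who you are
host=$(uname -n)  # the machine where your code is running
here=$(pwd)       # the directory where your code is running

# Assemble the pieces in the same order as the prompt.
prompt_line="${me}@${host}:${here} \$"
echo "$prompt_line"
```

Real prompts often shorten the directory (for example showing ~ for your home directory), so the line printed here may be longer than the one your terminal shows.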

Syntax

COMMAND -F --FLAG

- COMMAND is the program

- Everything after that are arguments

- F is a single letter flag

- FLAG is a long-form flag: a single word, or several words connected by dashes. A space breaks things into a new argument.

- Sometimes single letter and long form flags (e.g. F and FLAG) can refer to the same argument

COMMAND -F --FILE file1

Here we pass a text argument “file1” into the FILE flag

The -h flag is usually to get help. You can also run the man command and pass the name of the program as the argument to get the help page.
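As a concrete case of a short and a long flag naming the same option, head accepts both -n and --lines. The long form is a GNU coreutils feature, so this sketch assumes a typical Linux system; the sample file is made up.

```shell
# Hypothetical three-line sample file.
sample=$(mktemp)
printf 'a\nb\nc\n' > "$sample"

# Short flag: the value follows after a space.
short=$(head -n 2 "$sample")

# Long flag: the value is attached with an equals sign.
long=$(head --lines=2 "$sample")

[ "$short" = "$long" ] && echo "both forms print the same two lines"
```

Running man head lists the short and long spellings side by side.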

Let’s try basic commands:

- date to get the current date

- whoami to get your user name

- echo "Hello World" to print to the console

Examining Files

Find out your Present Working Directory pwd

Examine the contents of files and folders using the ls command

Make new files from scratch using the touch command

Globbing - how to select files in a general way

- \* for a wild card matching any number of characters

- \? for a wild card matching a single character

- [] for one of many character options

- ! for exclusion

- special options: [:alpha:], [:alnum:], [:digit:], [:lower:], [:upper:]
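A quick way to get a feel for these patterns is to create a few empty files in a scratch directory and let echo show what each pattern expands to. The file names below are made up for the exercise.

```shell
# Work in a throwaway directory so nothing real is touched.
workdir=$(mktemp -d)
cd "$workdir" || exit 1
touch data1.csv data2.csv data10.csv notes.txt Report.txt

echo data*.csv      # * matches any number of characters: all three data files
echo data?.csv      # ? matches exactly one character: data1.csv data2.csv
echo [nR]*.txt      # [] matches one character from the set: both .txt files
echo [!d]*          # ! inside [] excludes: every name not starting with d
echo [[:upper:]]*   # character class inside []: Report.txt
```

The shell expands the pattern before the command runs, so echo, ls, mv, and cp all receive the same list of matching names.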

Navigating Directories

Knowing where your terminal is executing code ensures you are working with the right inputs and making the right outputs.

Use the command pwd to determine the Present Working Directory.

Let’s say you need to change to a folder called “git-repo”. To change directories you can use a command like cd git-repo.

- . refers to the current directory, such as ./git-repo

- .. can be used to move up one folder (use cd ..) and can be combined to move up multiple levels, such as ../../my_folder

- / is the root of the Linux OS, where there are core folders, such as system, users, etc.

- ~ is the home directory. Move to folders referenced relative to this path by including it at the start of your path, for example ~/projects.

To view the structure of directories from your present working directory, use the tree command
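The relative paths above can be tried safely in a scratch directory. This sketch builds a small folder tree (the folder names are hypothetical) and records where each cd lands.

```shell
# Build a throwaway tree: <base>/git-repo/src
base=$(mktemp -d)
mkdir -p "$base/git-repo/src"

cd "$base/git-repo/src" || exit 1
cd ..                 # up one level, into git-repo
up_one=$(pwd)

cd ./src              # . is the current directory, so this goes back into src
cd ../..              # up two levels, back to the base folder
up_two=$(pwd)

echo "$up_one"
echo "$up_two"
echo ~/projects       # ~ expands to your home directory
```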

Interacting with Files

Now that we know how to navigate through directories, we need to learn the commands for interacting with files

- mv to move files from one location to another (can use file globbing here: ?, *, [], …)

- cp to copy files instead of moving (can use file globbing here: ?, *, [], …)

- mkdir to make a directory

- rm to remove files

- rmdir to remove directories

- rm -rf to blast everything! WARNING!!! DO NOT USE UNLESS YOU KNOW WHAT YOU ARE DOING
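Here is a short practice run of these commands inside a scratch directory, so that nothing important can be deleted by accident. The folder and file names are made up.

```shell
# Everything happens inside a throwaway directory.
sandbox=$(mktemp -d)
cd "$sandbox" || exit 1

mkdir raw backup                          # make two directories
touch raw/a.csv raw/b.csv raw/readme.txt  # create three empty files

cp raw/*.csv backup/    # copy every .csv file (globbing) into backup
mv raw/readme.txt .     # move the readme up into the current directory
rm raw/?.csv            # remove the originals, one-character names only
rmdir raw               # rmdir only succeeds because raw is now empty

ls backup
```

Note that rmdir refuses to delete a directory that still has files in it, which makes it a much safer habit than rm -rf.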

Using BASH for Data Exploration

Commands:

- head FILENAME / tail FILENAME - glimpse the first / last few rows of data

- more FILENAME / less FILENAME - page through the data (more mainly moves down; less moves up and down)

- cat FILENAME - print entire file contents into terminal

- vim FILENAME - open (or edit!) the file in the vim editor

- grep PATTERN FILENAME - search for lines within a file that match a regular expression

- wc FILENAME - count the number of lines (-l flag) or number of words (-w flag)
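To try the non-interactive commands from this list, the sketch below writes a tiny CSV file and inspects it. The file contents and column names are invented for the example.

```shell
# Hypothetical data file: a header plus four rows.
data=$(mktemp)
printf 'id,city,amount\n1,DC,10\n2,NYC,25\n3,DC,7\n4,LA,12\n' > "$data"

head -n 2 "$data"            # header and first data row
tail -n 1 "$data"            # last row
grep ',DC,' "$data"          # rows whose city field is DC

n_lines=$(wc -l < "$data")   # total number of lines, header included
dc_rows=$(grep -c ',DC,' "$data")   # -c counts matching lines
echo "$n_lines lines, $dc_rows of them for DC"
```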

Pipes and Arrows

- | sends the stdout to another command (is the most powerful symbol in BASH!)

- > sends stdout to a file and overwrites anything that was there before

- >> appends the stdout to the end of a file (or starts a new file from scratch if one does not exist yet)

- < sends the contents of a file as stdin into the command on the left

To-dos:

- echo Hello World

- Counting rows of data with certain attributes
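One possible take on the counting exercise, using all four symbols on a made-up web server log. The log format and file names are assumptions for illustration.

```shell
# Hypothetical log: method, path, status code.
log=$(mktemp)
printf 'GET /home 200\nGET /cart 500\nPOST /cart 200\nGET /home 200\n' > "$log"

# | chains commands: keep the rows ending in 200, then count them.
ok_count=$(grep ' 200$' "$log" | wc -l)

# > creates or overwrites a file; >> appends to it.
grep ' 500$' "$log" > "$log.errors"
grep 'POST' "$log" >> "$log.errors"

# < feeds the file to wc as stdin, so wc prints only the number.
error_lines=$(wc -l < "$log.errors")

echo "$ok_count successful requests, $error_lines lines saved for review"
```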

Alias and User Files

.bashrc is where your shell settings are located

If we wanted a shortcut to find out the number of our running processes, we would write a command like whoami | xargs ps -u | wc -l.

We don’t want to write out this full command every time! Let’s make an alias.

alias alias_name="command_to_run"

alias nproc="whoami | xargs ps -u | wc -l"

Now we need to put this alias into the .bashrc

alias nproc="whoami | xargs ps -u | wc -l" >> ~/.bashrc

What happened??

echo 'alias nproc="whoami | xargs ps -u | wc -l"' >> ~/.bashrc
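Quoting matters when writing the alias line to a file. The sketch below appends to a temporary file (a stand-in for ~/.bashrc, so your real settings are untouched) and compares what lands in the file with and without outer single quotes.

```shell
# Hypothetical stand-in for ~/.bashrc.
rcfile=$(mktemp)

# Without outer quotes the shell strips the double quotes before echo runs.
echo alias nproc="whoami | xargs ps -u | wc -l" >> "$rcfile"

# Single quotes pass the whole line through untouched, inner quotes included.
echo 'alias nproc="whoami | xargs ps -u | wc -l"' >> "$rcfile"

cat "$rcfile"
```

Only the second line is a usable alias definition, because the pipeline stays inside quotes. After adding it to the real ~/.bashrc, open a new terminal or run source ~/.bashrc for it to take effect.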

Your commands get saved in ~/.bash_history

Process Management

Use the command ps to see your running processes.

Use the command top or even better htop to see all the running processes on the machine.

Install the program htop using the command sudo yum install htop -y

Find the process ID (PID) so you can kill a broken process.

Use the command kill [PID NUM] to signal the process to terminate. If things get really bad, then use the command kill -9 [PID NUM]

To kill a command in the terminal window it is running in, try using Ctrl + C or Ctrl + \

Run the cat command on its own to let it stay open. Now open a new terminal to examine the processes and find the cat process.
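The same find-then-kill routine can be practiced in a single terminal with a harmless background process. This sketch uses sleep as a stand-in for a stuck program.

```shell
# Start a long-running process in the background and note its PID.
sleep 300 &
pid=$!

# Look it up by PID (ps may be missing on very minimal systems).
ps -p "$pid" -o pid=,comm= || echo "ps is not installed here"

# Ask the process to terminate, then collect its exit status.
kill "$pid"
status=0
wait "$pid" 2>/dev/null || status=$?

# An exit status above 128 means the process was ended by a signal.
echo "process $pid exited with status $status"
```

A status of 143 is 128 plus 15, the number of the default terminate signal that kill sends. kill -9 sends signal 9 instead, which the process cannot catch or ignore.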

Try playing a Linux game!

Bash crawl is a game to help you practice your navigation and file access skills. Click on the binder link in this repo to launch a jupyter lab session and explore!

Time for Lab!

DSAN 6000 | Fall 2023 | https://gu-dsan.github.io/6000-fall-2023/